Certified Argo Project Associate (CAPA) Exam Study Guide

I recently passed the Certified Argo Project Associate (CAPA) exam and wanted to share a study guide to help you prepare for it. If you are not already aware, the Cloud Native Computing Foundation (CNCF) offers a suite of certifications that validate your knowledge and expertise in cloud-native technologies, and CAPA is one of them.

The CAPA exam is designed for beginners who are new to Argo and its suite of tools, which includes Argo Workflows, Argo CD, Argo Rollouts, and Argo Events.

But be warned… even though it says it’s for beginners, it can be a bit intimidating if you’ve never worked with these tools before. So in this study guide, I’ll try to cover the key topics you should be familiar with and include some resources and practical exercises to help you prepare for the exam.

The good news is that the exam is multiple choice, so it’s not as rigorous as the Kubernetes exams where you have to perform tasks in a live server environment; however, you are expected to have a solid understanding of how to use these tools, including detailed knowledge of some of their Custom Resource Definition (CRD) specifications.

You have 90 minutes to complete the exam, and when you pass (I know you will ☺️), the certification is valid for 2 years. If you don’t pass on the first try… no sweat, you do get one free retake 😅

As documented on the CAPA exam page, here is a breakdown of the exam topics and the percentage of questions you can expect from each section:

- Argo Workflows: 36%

- Argo CD: 34%

- Argo Rollouts: 18%

- Argo Events: 12%

Let’s now dig into each of the topics and provide high-level summaries of what you should know for each section. I’ll also include some resources and practical exercises to help you prepare for the exam 💪

Argo Workflows 36%

Argo Workflows is an open-source, cloud-native workflow engine for orchestrating jobs on Kubernetes. Like all the other projects in the Argo suite, it’s implemented as an extension to Kubernetes following the Operator pattern, and it provides a powerful way to define complex workflows using YAML.

Understand Argo Workflow Fundamentals

When you install Argo Workflows, you get a Workflow Controller, which is responsible for reconciling Workflow resources toward their desired state, and an Argo Server, which is responsible for serving API requests and the UI.

Here is a high-level overview of the Argo Workflow architecture:

Source: https://argo-workflows.readthedocs.io/en/latest/architecture/


The Workflow CRD is the primary resource you will interact with to define your workflows. It allows you to define a sequence of tasks and the dependencies between them. The Workflow resource is also responsible for storing the state of the workflow.

The Workflow spec contains the following key attributes:

- entrypoint is the name of the template that will be executed first

- templates is a list of templates that define the tasks in the workflow

Check out this example of a basic workflow with some defaults.
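To make the entrypoint/templates relationship concrete, here is a minimal sketch of a Workflow manifest written as a Python dict and printed as JSON (which kubectl and the argo CLI also accept). The names hello-world- and main, and the busybox image, are illustrative choices rather than anything from the official example.

```python
import json

# Minimal Workflow manifest; "hello-world-" and "main" are illustrative names.
workflow = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Workflow",
    "metadata": {"generateName": "hello-world-"},
    "spec": {
        # entrypoint names the template that runs first
        "entrypoint": "main",
        # templates lists every template this workflow can invoke
        "templates": [
            {
                "name": "main",
                "container": {
                    "image": "busybox",
                    "command": ["echo"],
                    "args": ["hello world"],
                },
            }
        ],
    },
}

# Local sanity check: the entrypoint should match one of the template names.
template_names = {t["name"] for t in workflow["spec"]["templates"]}
assert workflow["spec"]["entrypoint"] in template_names

print(json.dumps(workflow, indent=2))
```

Because the manifest uses generateName, you would submit the printed JSON with argo submit or kubectl create -f rather than kubectl apply.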

The template is where tasks are defined to be run in the workflow. A template can be one of the following task types:

- container is a task that runs a container. This is useful when you want to run a task that is already containerized.

- script is a task that gives you a bit of flexibility to run a script inside a container. This is useful when you need to run a script but don’t want to create a separate container image.

- resource is a task that allows you to perform operations on cluster resources. This can be used to get, create, apply, delete, replace, or patch resources. This is useful when you need to interact with Kubernetes resources.

- suspend is a task that pauses the workflow until it is manually resumed or until a specified duration has elapsed. This is useful when you need to wait for a manual approval or intervention.

- plugin is a task that allows you to run an external plugin. This is useful when you need to run a task that is not supported by the built-in templates.

- containerSet is a task that allows you to run multiple containers within a single pod. Because the containers share a pod, they are scheduled on the same node and share its network namespace, and they can pass data through cheap emptyDir volumes instead of persistent volume claims.

- http is a task that allows you to make HTTP requests. This is useful when you need to interact with external services.

Be sure to read through the field reference for each template type to understand how it is defined, and take a look at some of the examples to see how they are used.
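Each template carries exactly one type key. The sketch below, assuming hypothetical template names, shows a script template and two suspend templates, plus a small helper that reports which type a template uses. The set of type keys in the helper is my own summary of the list above (plus steps, dag, and data), not an exhaustive copy of the spec.

```python
import json

script_template = {
    "name": "print-random",
    "script": {
        "image": "python:3.12-alpine",
        "command": ["python"],
        # The source is saved to a file inside the container and run by the command.
        "source": "import random\nprint(random.randint(1, 100))\n",
    },
}

# An empty suspend pauses until someone resumes the workflow (e.g. argo resume).
approval_template = {"name": "wait-for-approval", "suspend": {}}

# A suspend with a duration resumes on its own.
timed_pause_template = {"name": "pause-30s", "suspend": {"duration": "30s"}}

TEMPLATE_TYPES = {
    "container", "script", "resource", "suspend", "plugin",
    "containerSet", "http", "steps", "dag", "data",
}

def template_type(template):
    """Return the single template type key used by a template."""
    kinds = [key for key in template if key in TEMPLATE_TYPES]
    if len(kinds) != 1:
        raise ValueError(f"expected exactly one template type, found {kinds}")
    return kinds[0]

for t in (script_template, approval_template, timed_pause_template):
    print(t["name"], "->", template_type(t))
print(json.dumps(script_template, indent=2))
```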

You can also control the order in which tasks are run with template invocators. This defines the execution flow of the workflow and there are two types of invocators:

- steps is a list of lists of tasks. The outer list runs sequentially, while the steps within each inner list run in parallel, and a step can invoke another steps template to create a hierarchy of tasks. If you need more control over how many parallel steps run at once, look at the parallelism and synchronization fields.

- dag is a directed acyclic graph of tasks that are executed based on their dependencies. This is useful when you need to run tasks in parallel while defining dependencies between them, especially when you have tasks that rely on the output of other tasks.
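The list-of-lists shape of steps is easy to misread, so here is a small sketch of a steps template (the step and template names are hypothetical) and a helper that prints which steps run together.

```python
# Outer list: groups that run one after another.
# Inner lists: steps that run in parallel within a group.
steps_template = {
    "name": "hello-hello-hello",
    "steps": [
        [{"name": "hello1", "template": "print-message"}],
        [
            {"name": "hello2a", "template": "print-message"},
            {"name": "hello2b", "template": "print-message"},
        ],
    ],
}

def execution_groups(template):
    """Return step names grouped in the order the groups run."""
    return [[step["name"] for step in group] for group in template["steps"]]

for i, group in enumerate(execution_groups(steps_template), start=1):
    mode = "in parallel" if len(group) > 1 else "alone"
    print(f"group {i}: {', '.join(group)} ({mode})")
```

Here hello1 runs first, and only after it finishes do hello2a and hello2b start together.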

Generating and Consuming Artifacts

Artifacts can be consumed by tasks as inputs or produced by tasks as outputs, which in turn can be consumed by other tasks.

Check out this example of a workflow that generates and consumes artifacts.

To output an artifact, you can use the outputs.artifacts field in the task template. This field is a list of artifacts that includes the name of the artifact and the path to the artifact.

To consume an artifact, you can use the inputs.artifacts field in the task template. Like the outputs field, this field is a list of artifacts that includes the name of the artifact and the path to the artifact. If you are consuming an artifact that is an output of another task, you can reference the artifact by the name of the task that produced it along with the name of the artifact.
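A sketch of the output/input pairing, assuming hypothetical template and artifact names and an artifact repository already configured for the cluster (passing artifacts between steps needs one). In a steps template the reference takes the form {{steps.STEP.outputs.artifacts.NAME}}; in a DAG it uses tasks instead of steps.

```python
import json

generate = {
    "name": "generate-artifact",
    "container": {
        "image": "busybox",
        "command": ["sh", "-c"],
        "args": ["echo hello > /tmp/hello.txt"],
    },
    # Output artifact: a name plus the path inside the container to collect.
    "outputs": {"artifacts": [{"name": "hello-art", "path": "/tmp/hello.txt"}]},
}

consume = {
    "name": "print-message",
    # Input artifact: a name plus the path where it will be placed.
    "inputs": {"artifacts": [{"name": "message", "path": "/tmp/message"}]},
    "container": {"image": "busybox", "command": ["sh", "-c"], "args": ["cat /tmp/message"]},
}

def artifact_ref(step_name, artifact_name):
    """Expression a later step uses to reference an earlier step's output artifact."""
    return "{{steps.%s.outputs.artifacts.%s}}" % (step_name, artifact_name)

main = {
    "name": "main",
    "steps": [
        [{"name": "generate", "template": "generate-artifact"}],
        [{
            "name": "consume",
            "template": "print-message",
            "arguments": {"artifacts": [
                {"name": "message", "from": artifact_ref("generate", "hello-art")}
            ]},
        }],
    ],
}

print(json.dumps([main, generate, consume], indent=2))
```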

There are also ways to reduce the amount of data that is stored in the workflow by using artifact repositories and garbage collection.

Finally, you can get a bit more advanced control on when to output an artifact by using conditional artifacts based on expressions.

Understand Argo Workflow Templates

WorkflowTemplates are reusable, namespace-scoped definitions of templates that can be used to build Workflows. They are useful when you have a common set of tasks that you want to reuse across multiple workflows in a namespace. They build on the same concepts as Workflows but are defined as a separate resource.

Check out this example of workflow templates and this example of how workflow templates can be referenced in workflow definitions.

Note how the Workflow in the sample calls the random-fail-template, which is defined in the templates.yaml file.

There is also a concept of cluster scoped workflow templates with the ClusterWorkflowTemplate resource. This is useful when you want to define a template that can be used across multiple namespaces.
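Here is a sketch of how a Workflow can call one template from a WorkflowTemplate through a templateRef. The names shared-templates, say, and msg are hypothetical. Setting clusterScope on the reference points it at a ClusterWorkflowTemplate instead; to run an entire WorkflowTemplate, you would use spec.workflowTemplateRef on the Workflow.

```python
import json

def template_ref(name, template, cluster_scope=False):
    """Build a templateRef pointing at one template inside a (Cluster)WorkflowTemplate."""
    ref = {"name": name, "template": template}
    if cluster_scope:
        # Look up a ClusterWorkflowTemplate instead of a namespaced WorkflowTemplate.
        ref["clusterScope"] = True
    return ref

workflow_template = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "WorkflowTemplate",
    "metadata": {"name": "shared-templates"},
    "spec": {
        "templates": [{
            "name": "say",
            "inputs": {"parameters": [{"name": "msg"}]},
            "container": {
                "image": "busybox",
                "command": ["echo"],
                "args": ["{{inputs.parameters.msg}}"],
            },
        }]
    },
}

workflow = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Workflow",
    "metadata": {"generateName": "use-shared-"},
    "spec": {
        "entrypoint": "main",
        "templates": [{
            "name": "main",
            "steps": [[{
                "name": "greet",
                "templateRef": template_ref("shared-templates", "say"),
                "arguments": {"parameters": [{"name": "msg", "value": "hi"}]},
            }]],
        }],
    },
}

print(json.dumps(workflow, indent=2))
```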

Understand the Argo Workflow Spec

There will be questions on the Workflow spec so be sure to read through the documentation to understand the various fields that can be used to configure the behavior of the workflow. Some of the key fields to pay attention to include:

- activeDeadlineSeconds

- arguments

- metrics

- retryStrategy

- synchronization

- templateDefaults

- workflowTemplateRef

Also, be sure to understand how to configure variables in the workflow spec and how to configure metrics which will be useful when you want to monitor the performance of the workflow and its tasks.

Finally, it is important to understand how to configure retries, retry strategy, and the retry policies that are available to you to handle task failures, because they will eventually fail 😉
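A sketch of a few of these spec fields together, with a template that always fails so retries kick in. The values are illustrative. Note that limit counts retries, not total runs, so the maximum number of attempts is limit + 1.

```python
import json

retrying_template = {
    "name": "flaky",
    "retryStrategy": {
        "limit": "3",                # retry up to 3 times after the first attempt
        "retryPolicy": "OnFailure",  # other policies: Always, OnError, OnTransientError
        "backoff": {"duration": "5s", "factor": 2, "maxDuration": "1m"},
    },
    # Always exits non-zero, so every attempt fails.
    "container": {"image": "busybox", "command": ["sh", "-c"], "args": ["exit 1"]},
}

workflow_spec = {
    "entrypoint": "flaky",
    "activeDeadlineSeconds": 600,  # fail the whole workflow after 10 minutes
    "templates": [retrying_template],
}

def max_attempts(strategy):
    """Total runs allowed: the first attempt plus `limit` retries."""
    return 1 + int(strategy["limit"])

print(max_attempts(retrying_template["retryStrategy"]))
print(json.dumps(workflow_spec, indent=2))
```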

Work with DAG (Directed-Acyclic Graphs)

A DAG is important to understand because it lets you define dependencies between tasks in a workflow. Tasks without dependencies can run in parallel, while a task with dependencies waits until all of the tasks it depends on have completed. If you have tasks that rely on the output of other tasks, you’ll want to use a DAG.

Check out this sample workflow that uses a DAG.

Pay special attention to how tasks within a DAG workflow are executed based on dependencies. You may be faced with questions on what outputs would look like based on the dependencies defined in the workflow.
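For questions about execution order, it helps to think in "waves": a task can start once everything it depends on is done. This sketch uses Python's graphlib to compute those waves for a diamond-shaped DAG (A, then B and C, then D). It models plain dependencies only and does not cover Argo's richer depends expressions.

```python
from graphlib import TopologicalSorter

dag_template = {
    "name": "diamond",
    "dag": {"tasks": [
        {"name": "A", "template": "echo"},
        {"name": "B", "template": "echo", "dependencies": ["A"]},
        {"name": "C", "template": "echo", "dependencies": ["A"]},
        {"name": "D", "template": "echo", "dependencies": ["B", "C"]},
    ]},
}

def execution_waves(template):
    """Group tasks so every task's dependencies sit in earlier waves."""
    deps = {t["name"]: set(t.get("dependencies", [])) for t in template["dag"]["tasks"]}
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are all done
        waves.append(ready)
        sorter.done(*ready)
    return waves

print(execution_waves(dag_template))  # [['A'], ['B', 'C'], ['D']]
```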

Run Data Processing Jobs with Argo Workflows

Data processing jobs are common use cases for Argo Workflows. You can use Argo Workflows to process data from various sources, transform the data, and store the results.

Check out the data processing and data sourcing and transformation documentation to understand how to configure workflows to process data.

Data sources essentially point to artifact repositories, which can be external, like S3 buckets. The data fetched from these sources can then be transformed using expressions.
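A sketch of a data template that lists artifact paths in an S3-compatible bucket and keeps only files ending in main.log. The bucket, endpoint, and secret names are hypothetical placeholders. The local_filter function is plain Python that mimics the filter expression so you can reason about the result; Argo itself evaluates the expression string.

```python
import json

data_template = {
    "name": "list-log-files",
    "data": {
        "source": {
            # Generate the list of all artifact paths in the given repository.
            "artifactPaths": {
                "s3": {
                    "bucket": "my-bucket",        # hypothetical
                    "endpoint": "minio:9000",     # hypothetical
                    "insecure": True,
                    "accessKeySecret": {"name": "my-minio-cred", "key": "accesskey"},
                    "secretKeySecret": {"name": "my-minio-cred", "key": "secretkey"},
                }
            }
        },
        # Transformations are expressions applied to the fetched data.
        "transformation": [{"expression": 'filter(data, {# endsWith "main.log"})'}],
    },
}

def local_filter(paths, suffix):
    """Plain-Python stand-in for the filter expression above."""
    return [p for p in paths if p.endswith(suffix)]

sample = ["run-1/main.log", "run-1/debug.txt", "run-2/main.log"]
print(local_filter(sample, "main.log"))
print(json.dumps(data_template, indent=2))
```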

Check out this example of a workflow that processes data.

Practice

To see Argo Workflows in action, follow the quick start guide to deploy a simple workflow that processes data.

Argo CD 34%

Argo CD is a declarative, continuous delivery tool for Kubernetes that enables you to implement GitOps principles. It allows you to manage the full lifecycle of applications in a Kubernetes cluster using Git repositories as the source of truth.

Understand Argo CD Fundamentals

To understand how you can best leverage Argo CD, you need to be familiar with basic concepts around containerized applications, Kubernetes, and tools commonly used to deploy and manage applications like Helm and Kustomize.

To quickly get up to speed, make sure you read through the Understanding The Basics section of the Argo CD documentation.

Here are some of the basic core concepts you should be familiar with:

- Application is a group of Kubernetes resources, defined by manifests, that make up your application. It is represented in Argo CD by the Application CRD, which points to the manifests stored in a Git repository.

- Application source type is the tool used to manage the application like Helm or Kustomize

- Target state is the desired state of the application that is defined in the Git repository

- Live state is the actual state of the application in the Kubernetes cluster

- Sync is the process of making the live state match the target state, for example by applying changes to the Kubernetes cluster

- Sync status indicates whether the target state and live state are in sync

- Sync operation status indicates the status of the last sync operation

- Refresh is the action of comparing the live state with the target state
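To tie target state, live state, and sync status together, here is a toy model. Resources are keyed by (kind, name), and the app is Synced only when nothing is missing, extra, or drifted. Argo CD's real diffing is far more involved (normalization, ignored fields, and so on), and the image names here are hypothetical.

```python
def diff(target, live):
    """Compare desired (Git) and live (cluster) resources keyed by (kind, name)."""
    missing = sorted(set(target) - set(live))   # in Git, not in the cluster
    extra = sorted(set(live) - set(target))     # in the cluster, no longer in Git
    drifted = sorted(k for k in set(target) & set(live) if target[k] != live[k])
    return missing, extra, drifted

def sync_status(target, live):
    return "Synced" if not any(diff(target, live)) else "OutOfSync"

target = {("Deployment", "guestbook-ui"): {"replicas": 3, "image": "guestbook:v2"}}
live = {("Deployment", "guestbook-ui"): {"replicas": 3, "image": "guestbook:v1"}}

print(diff(target, live))          # the Deployment has drifted
print(sync_status(target, live))   # OutOfSync
print(sync_status(target, target)) # Synced
```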

Here is the high-level architecture of Argo CD. It too is implemented as an extension to Kubernetes following the Operator pattern.

Source: https://argo-cd.readthedocs.io/en/stable/operator-manual/architecture/


One of the major components of Argo CD is the API Server, which exposes the API consumed by external systems like the CLI, the UI, and CI/CD systems. It also handles application management, repository and cluster credential management, and RBAC enforcement, acting as the security gatekeeper for the cluster.

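The second major component is the Repository Server, which maintains a local cache of the Git repositories holding your application manifests and generates the Kubernetes manifests (for example, by rendering Helm charts or Kustomize overlays) for a given repository, revision, and path.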

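Lastly, the Application Controller continuously monitors running applications, compares their live state against the target state from the repository, detects applications that are OutOfSync, and optionally takes corrective action.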

Synchronize Applications with Argo CD

Argo CD can keep your applications in sync automatically with the desired state defined in the Git repository. You can control how the synchronization happens using various sync options which can be configured via resource annotations or via the spec.syncPolicy.syncOptions attribute in the Application CRD. Some of the options include controlling how the sync is performed, what resources are ignored, and how to handle out-of-sync situations like pruning resources or not pruning resources, performing selective syncs, server-side apply, and more. So be sure you understand how to configure these options.
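A sketch of a syncPolicy block with automated sync and a few sync options, plus a per-resource annotation that opts a single resource out of pruning. Which options you enable is a judgment call for your app; these are just illustrative picks.

```python
import json

sync_policy = {
    # Sync automatically when Git changes, delete resources removed from Git (prune),
    # and revert manual changes made directly in the cluster (selfHeal).
    "automated": {"prune": True, "selfHeal": True},
    "syncOptions": ["CreateNamespace=true", "ServerSideApply=true", "PruneLast=true"],
}

# Per-resource override: Argo CD will not prune this resource.
resource_annotations = {"argocd.argoproj.io/sync-options": "Prune=false"}

def parse_sync_options(options):
    """Turn ["Key=value", ...] into {"Key": "value", ...} for easy inspection."""
    return dict(opt.split("=", 1) for opt in options)

print(parse_sync_options(sync_policy["syncOptions"]))
print(json.dumps({"syncPolicy": sync_policy}, indent=2))
```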

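If you need to control the order in which resources are synced, you can use sync waves by annotating resources with argocd.argoproj.io/sync-wave. This is useful when you have dependencies between resources (including child Application resources in an App of Apps setup) and need to ensure that they are synced in a specific order.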
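A toy ordering of resources by sync wave. Lower waves go first, resources without the annotation are in wave 0, and Argo CD waits for a wave to be healthy before moving on. As a simplification, this sketch breaks ties by sorting kinds alphabetically, whereas Argo CD also orders by phase and uses its own kind ordering.

```python
SYNC_WAVE = "argocd.argoproj.io/sync-wave"

resources = [
    {"kind": "Service", "metadata": {"name": "api", "annotations": {SYNC_WAVE: "1"}}},
    {"kind": "Deployment", "metadata": {"name": "api"}},
    {"kind": "ConfigMap", "metadata": {"name": "app-config", "annotations": {SYNC_WAVE: "-1"}}},
]

def wave(resource):
    """Sync wave from the annotation; resources without it are in wave 0."""
    return int(resource["metadata"].get("annotations", {}).get(SYNC_WAVE, "0"))

def sync_order(items):
    # Simplified tie-breaker: kind (alphabetical), then name.
    return sorted(items, key=lambda r: (wave(r), r["kind"], r["metadata"]["name"]))

print([f'{r["kind"]}/{r["metadata"]["name"]}' for r in sync_order(resources)])
```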

If you need to control when a sync can occur, you can use sync windows to define a time window when a sync can or cannot occur. This is useful when you need to prevent a sync from happening during a maintenance window or when you need to ensure that a sync happens during a specific time.
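Sync windows are configured on the AppProject. The sketch below shows a deny window and an allow window (application names and schedules are illustrative), plus a toy helper for a window that starts at the same hour every day. Real windows use cron schedules and durations, so the helper is only for intuition.

```python
import json

project_spec = {
    "syncWindows": [
        # Block syncs for one hour starting at 22:00 daily, but still allow manual syncs.
        {"kind": "deny", "schedule": "0 22 * * *", "duration": "1h",
         "applications": ["*"], "manualSync": True},
        # Allow syncs of prod-* apps during a weekday window starting at 08:00.
        {"kind": "allow", "schedule": "0 8 * * 1-5", "duration": "10h",
         "applications": ["prod-*"]},
    ]
}

def in_daily_window(hour, start_hour, duration_hours):
    """Toy check for a window starting at start_hour each day (wraps past midnight)."""
    return (hour - start_hour) % 24 < duration_hours

print(in_daily_window(22, 22, 1), in_daily_window(23, 22, 1))
print(json.dumps(project_spec, indent=2))
```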

You can also control which resources get synced by performing a selective sync, or by applying special annotations to resources so they are ignored.

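Lastly, synchronization can be controlled using resource hooks, which give you the flexibility to run custom logic at various stages of the synchronization process: PreSync (before the manifests are applied), Sync (at the same time as the manifests), PostSync (after the sync completes and all resources are healthy), and SyncFail (when the sync operation fails).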

Knowing when to hook into the synchronization process is important so be sure to read through the documentation to understand how to use resource hooks.
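A common hook is a database migration Job that must finish before the new manifests are applied. In this sketch the image and command are hypothetical; the annotations mark the Job as a PreSync hook and delete it once it succeeds.

```python
import json

HOOK = "argocd.argoproj.io/hook"
HOOK_DELETE_POLICY = "argocd.argoproj.io/hook-delete-policy"

presync_job = {
    "apiVersion": "batch/v1",
    "kind": "Job",
    "metadata": {
        "generateName": "db-migrate-",
        "annotations": {
            HOOK: "PreSync",                      # run before the manifests are applied
            HOOK_DELETE_POLICY: "HookSucceeded",  # clean up the Job after it succeeds
        },
    },
    "spec": {
        "backoffLimit": 1,
        "template": {"spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "migrate",
                "image": "my-registry/db-migrate:1.0",  # hypothetical image
                "command": ["./migrate.sh"],             # hypothetical command
            }],
        }},
    },
}

print(json.dumps(presync_job, indent=2))
```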

Use Argo CD Application

Before we get into the Argo CD Application, you should know that Argo CD Projects (the AppProject resource) are used to group applications and manage access control. This is useful when you need to manage multiple applications and control who has access to them. Argo CD comes with a project called default, and most beginners will deploy applications to this project. However, as your team grows and you have more applications, you may want to create additional projects to restrict which repositories applications can be deployed from, what kinds of resources can be deployed, and which clusters and namespaces they can be deployed to.

Check out this example of how to define a project and the various fields that can be configured.
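A sketch of an AppProject that limits source repos and destinations with glob patterns. The organization, repo, and namespace patterns are hypothetical. The repo_allowed helper uses Python's fnmatch as a rough stand-in for Argo CD's own glob matching.

```python
import json
from fnmatch import fnmatch

app_project = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "AppProject",
    "metadata": {"name": "team-a", "namespace": "argocd"},
    "spec": {
        "description": "Applications owned by team A",
        # Where manifests may come from
        "sourceRepos": ["https://github.com/example-org/team-a-*"],
        # Where applications may be deployed to
        "destinations": [{"server": "https://kubernetes.default.svc", "namespace": "team-a-*"}],
        # Cluster-scoped kinds this project may create
        "clusterResourceWhitelist": [{"group": "", "kind": "Namespace"}],
    },
}

def repo_allowed(project, repo_url):
    """Rough approximation of the sourceRepos check using glob matching."""
    return any(fnmatch(repo_url, pattern) for pattern in project["spec"]["sourceRepos"])

print(repo_allowed(app_project, "https://github.com/example-org/team-a-api"))
print(json.dumps(app_project, indent=2))
```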

You can also work with Projects and most other Argo CD resources using the argocd CLI or the Argo CD UI.

The Application resource is the primary resource you will interact with to define the applications that Argo CD will manage. Two key attributes to pay attention to are:

- source is the source of the application that points to the Git repository

- destination is the destination of the application that points to the Kubernetes cluster
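A minimal Application sketch using the public argocd-example-apps guestbook, deployed to the same cluster Argo CD runs in. The destination namespace is an illustrative choice.

```python
import json

application = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Application",
    "metadata": {"name": "guestbook", "namespace": "argocd"},
    "spec": {
        "project": "default",
        # source: where the manifests live
        "source": {
            "repoURL": "https://github.com/argoproj/argocd-example-apps.git",
            "targetRevision": "HEAD",
            "path": "guestbook",
        },
        # destination: which cluster and namespace to deploy to
        "destination": {
            "server": "https://kubernetes.default.svc",
            "namespace": "guestbook",
        },
    },
}

print(json.dumps(application, indent=2))
```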

Check out this example of an application to see how it is defined and the various fields that can be configured and this list of annotations and labels that can be used to control how the application is managed.

If you need to pass parameters to your application, you can use parameter overrides in the Application spec. This is useful when you need to override Helm or Kustomize configuration values without changing the files stored in the Git repository.

Typically your application will be sourced from a single repo; however, you can also source your application from multiple sources.

Here is a sample of how to define multiple sources in an application.
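A common multi-source pattern pulls a Helm chart from a chart repository and its values file from a separate Git repo, which is named with ref and referenced as $values. The chart version and the value-files repository are illustrative; the helper just checks that every $ref used in valueFiles is defined by some source.

```python
import json

multi_source_app_spec = {
    "project": "default",
    "sources": [
        {
            "repoURL": "https://prometheus-community.github.io/helm-charts",
            "chart": "prometheus",
            "targetRevision": "15.7.1",
            "helm": {"valueFiles": ["$values/charts/prometheus/values.yaml"]},
        },
        {
            # Hypothetical Git repo holding only values files; "ref" names it for $values.
            "repoURL": "https://git.example.com/org/value-files.git",
            "targetRevision": "dev",
            "ref": "values",
        },
    ],
    "destination": {"server": "https://kubernetes.default.svc", "namespace": "monitoring"},
}

def value_file_refs(spec):
    """Collect the ref names used by $ref/... value file paths across all sources."""
    refs = set()
    for source in spec["sources"]:
        for path in source.get("helm", {}).get("valueFiles", []):
            if path.startswith("$"):
                refs.add(path[1:].split("/", 1)[0])
    return refs

defined_refs = {s["ref"] for s in multi_source_app_spec["sources"] if "ref" in s}
assert value_file_refs(multi_source_app_spec) <= defined_refs
print(json.dumps(multi_source_app_spec, indent=2))
```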

If you are managing applications that need to be deployed across multiple clusters, you can use the ApplicationSet resource, which is managed by the ApplicationSet controller. This feature extends the capabilities of the Application resource and focuses on cluster administration scenarios, including multi-cluster application management, multi-tenancy, and more.

Prior to Argo CD v2.3, the ApplicationSet controller required a separate installation, but it is now included in the main Argo CD installation.

The ApplicationSet resource gives you powerful tools to template your applications using the template field, with the option of using Go templates to generate unique values for fields.

It is also worth being familiar with the generators available in the ApplicationSet resource, which produce the parameters that are substituted into the template. These include the List, Cluster, Git, Matrix, Merge, SCM Provider, Pull Request, Cluster Decision Resource, and Plugin generators.
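Here is a sketch of an ApplicationSet with a List generator, plus a toy render function that substitutes each element into the template the way the default {{param}} syntax does. The cluster names and URLs are illustrative, and the renderer is a simplification of the controller's templating, not its actual implementation.

```python
appset = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "ApplicationSet",
    "metadata": {"name": "guestbook", "namespace": "argocd"},
    "spec": {
        "generators": [{"list": {"elements": [
            {"cluster": "engineering-dev", "url": "https://1.2.3.4"},
            {"cluster": "engineering-prod", "url": "https://2.4.6.8"},
        ]}}],
        "template": {
            "metadata": {"name": "{{cluster}}-guestbook"},
            "spec": {
                "project": "default",
                "source": {
                    "repoURL": "https://github.com/argoproj/argocd-example-apps.git",
                    "targetRevision": "HEAD",
                    "path": "guestbook",
                },
                "destination": {"server": "{{url}}", "namespace": "guestbook"},
            },
        },
    },
}

def render(node, params):
    """Toy substitution of {{key}} placeholders through nested dicts, lists, and strings."""
    if isinstance(node, dict):
        return {k: render(v, params) for k, v in node.items()}
    if isinstance(node, list):
        return [render(v, params) for v in node]
    if isinstance(node, str):
        for key, value in params.items():
            node = node.replace("{{" + key + "}}", value)
    return node

elements = appset["spec"]["generators"][0]["list"]["elements"]
apps = [render(appset["spec"]["template"], element) for element in elements]
print([a["metadata"]["name"] for a in apps])
```

Each generator element yields one Application, so adding a cluster is just adding an element.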

In some advanced scenarios like cluster bootstrapping, you may need to use an App of Apps pattern that can be used to nest applications within a parent application. This is useful when you need to create applications that in turn create other applications.

Prior to Argo CD v2.5, Application resources had to live in the argocd namespace, where Argo CD itself is installed. Since then, Argo CD administrators can define additional namespaces in which Application resources may be created. To enable this apps-in-any-namespace feature, check out the namespace-based configuration documentation to see which flags must be set.

Deleting an application is a common task that you will need to perform. You can delete an application with either the argocd CLI or kubectl, and with both you have control over whether the resources it manages are deleted too (cascading deletion). This is useful when you need to control how resources are cleaned up when an application is deleted. Also be sure to understand how the deletion finalizer can be used with the App of Apps pattern.
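With the argocd CLI, argocd app delete cascades by default and --cascade=false turns that off. With kubectl, deleting an Application only cascades when the resources finalizer is present in its metadata. A small sketch of that check:

```python
RESOURCES_FINALIZER = "resources-finalizer.argocd.argoproj.io"

app_metadata = {
    "name": "guestbook",
    "namespace": "argocd",
    # With this finalizer, deleting the Application also deletes the resources it manages.
    "finalizers": [RESOURCES_FINALIZER],
}

def cascades_on_kubectl_delete(metadata):
    """True when kubectl delete on the Application would also remove its resources."""
    return RESOURCES_FINALIZER in metadata.get("finalizers", [])

print(cascades_on_kubectl_delete(app_metadata))
print(cascades_on_kubectl_delete({"name": "no-finalizer"}))
```

In an App of Apps setup, putting this finalizer on the child Applications means deleting the parent can clean up the whole tree.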

Configure Argo CD with Helm and Kustomize

In the section above, you saw how Application resources can be sourced from Helm and Kustomize.

When you use Helm to define your application, it is important to know that Argo CD inflates (renders) the Helm chart into plain manifests and applies them to the cluster itself. It does not create a Helm release, so don’t expect to see the application with typical Helm commands like helm list. Be sure to take a look through the Helm guide to understand how to pass in parameter values, configure hooks, and even install custom Helm plugins.

For Kustomize based applications, be sure to read through the Kustomize guide to understand how to configure your application using patches, components, and build options.

Argo CD supports multiple versions of Kustomize. See this sample on how to set the version of Kustomize an application uses, but note that each version must also be registered in the argocd-cm ConfigMap.

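Lastly, you can use Kustomize to customize a Helm chart deployment, which requires passing the --enable-helm flag to kustomize build (for example, through the kustomize.buildOptions setting in the argocd-cm ConfigMap). This is useful when you need to customize a Helm chart deployment using Kustomize patches.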
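A sketch of Helm and Kustomize sources side by side, plus the matching argocd-cm entries. The repo paths come from argocd-example-apps. The values file name and the kustomize binary path are assumptions, so check them against your own repo and image. The helper confirms that a pinned Kustomize version has a matching kustomize.path entry.

```python
import json

helm_source = {
    "repoURL": "https://github.com/argoproj/argocd-example-apps.git",
    "targetRevision": "HEAD",
    "path": "helm-guestbook",
    "helm": {
        "releaseName": "guestbook",
        "valueFiles": ["values-production.yaml"],  # assumed file name in the chart directory
        # Parameter overrides, similar in spirit to helm template --set service.type=LoadBalancer
        "parameters": [{"name": "service.type", "value": "LoadBalancer"}],
    },
}

kustomize_source = {
    "repoURL": "https://github.com/argoproj/argocd-example-apps.git",
    "targetRevision": "HEAD",
    "path": "kustomize-guestbook",
    "kustomize": {"namePrefix": "prod-", "version": "v3.5.4"},
}

# argocd-cm ConfigMap data; the binary path is a hypothetical location.
argocd_cm_data = {
    "kustomize.path.v3.5.4": "/custom-tools/kustomize_3_5_4",
    "kustomize.buildOptions": "--enable-helm",
}

def kustomize_version_registered(source, cm_data):
    """A pinned Kustomize version needs a matching kustomize.path.<version> entry."""
    version = source.get("kustomize", {}).get("version")
    return version is None or f"kustomize.path.{version}" in cm_data

print(kustomize_version_registered(kustomize_source, argocd_cm_data))
print(json.dumps({"helm": helm_source, "kustomize": kustomize_source}, indent=2))
```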

Identify Common Reconciliation Patterns

Argo CD uses a reconciliation loop to ensure that the target state of the application matches the live state in the Kubernetes cluster. This loop is responsible for detecting changes in the target state, comparing it with the live state, and taking the necessary actions to ensure that the target state is applied to the live state.

Be sure to read through the reconciliation optimization documentation to understand how to optimize the reconciliation process and improve the performance of Argo CD.

You can also configure rate limits on application reconciliations to control the rate at which applications are reconciled and configure retry and backoff strategies. This is useful when you need to prevent the reconciliation loop from consuming too many resources.
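The retry and backoff settings share a common shape: start from a base delay, multiply by a factor after each failure, and cap the delay. This sketch mirrors the fields of an Application's spec.syncPolicy.retry block (values are illustrative) and computes the delays in seconds. It illustrates the idea and is not Argo CD's internal rate limiter.

```python
# Shape of an Application's spec.syncPolicy.retry block (illustrative values).
sync_retry = {
    "limit": 5,
    "backoff": {"duration": "5s", "factor": 2, "maxDuration": "3m"},
}

def backoff_delays(base_seconds, factor, max_seconds, retries):
    """Delay before each retry: base * factor**n, never exceeding max_seconds."""
    return [min(base_seconds * factor ** n, max_seconds) for n in range(retries)]

print(backoff_delays(5, 2, 180, sync_retry["limit"]))  # [5, 10, 20, 40, 80]
print(backoff_delays(5, 2, 180, 7))                    # [5, 10, 20, 40, 80, 160, 180]
```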

Practice

To see Argo CD in action, follow the quick start guide to deploy a simple application and manage its lifecycle.

Argo Rollouts 18%

Argo Rollouts is a tool that enables you to manage and automate the deployment of applications on Kubernetes. It takes the concept of Kubernetes Deployment to the next level by providing advanced deployment strategies and features that help you achieve more controlled and reliable application updates.

Like all the other Argo projects, it is implemented as a Kubernetes controller with its own set of CRDs that provide advanced deployment capabilities such as blue-green deployments, canary deployments, and progressive delivery.

Understand Argo Rollouts Fundamentals

In the context of software development, Continuous Integration (CI), Continuous Delivery (CD), and Progressive Delivery (PD) are three key practices that help teams deliver software more efficiently and reliably.

- CI is the process of integrating code changes in a shared repository and making sure it can be built and tested with the intent of detecting issues early.

- CD is the ability to deploy apps to production, reliably, confidently, and on demand.

- PD is the evolution of CD that focuses on the gradual and controlled delivery of new features to users, which ultimately reduces the risk of deploying them. In addition to gradual delivery, PD also gives you the ability to implement feature flags, A/B testing, and phased rollouts.

Argo Rollouts enables PD by providing a new controller called the Argo Rollouts Controller to manage the lifecycle of Pods and ReplicaSets.

Source: https://argoproj.github.io/argo-rollouts/architecture/


The Rollout resource is the primary resource that you will interact with. It can be used as a drop-in replacement for the Deployment resource or alongside it.

The ReplicaSet is the same as the one used by the Deployment controller. The difference is that the Rollout controller will manage the ReplicaSet and its Pods.

Argo Rollouts also has the ability to integrate with Ingress, Service and/or service meshes to manage traffic routing and direct traffic to the new versions of an application.

The traffic can be managed manually using the UI or CLI, or automatically using the Analysis feature. This feature allows you to integrate with various metrics providers to monitor and analyze the performance of the new version of an application. If the metrics do not meet the defined thresholds, the Rollout controller will automatically abort the rollout and roll back to the previous version.

Use Common Progressive Rollout Strategies

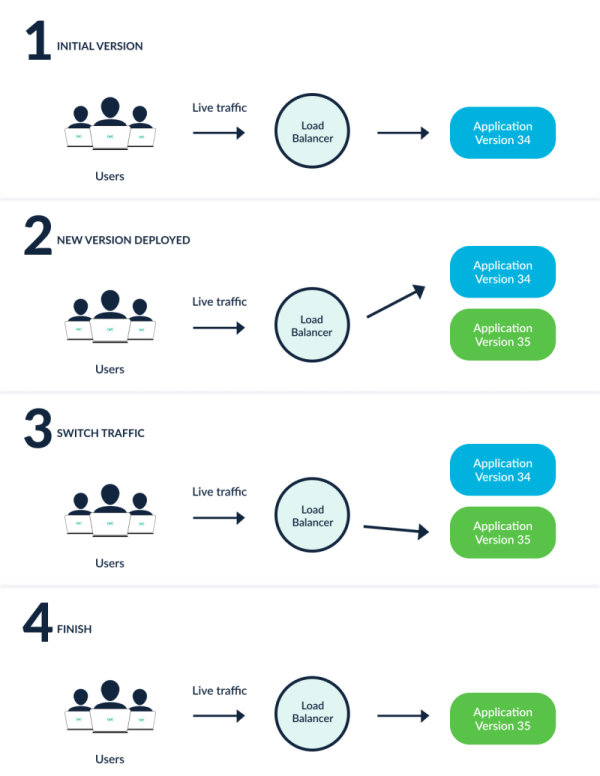

The most commonly used progressive rollout strategies within Argo Rollouts are Blue-Green and Canary.

With Blue-Green, the new version of an application is deployed alongside the old version while the old version continues to receive production traffic. Once testing is complete, traffic is switched to the new version and, after a configurable delay, the old version is scaled down.

Source: https://argoproj.github.io/argo-rollouts/concepts/#blue-green
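A sketch of a Blue-Green Rollout. The Service names are hypothetical and must exist separately; the demo image is the one used in the Argo Rollouts getting-started material. With autoPromotionEnabled set to false, the switch waits for a manual promotion (for example, kubectl argo rollouts promote).

```python
import json

rollout_bluegreen = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Rollout",
    "metadata": {"name": "demo-bluegreen"},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "demo"}},
        "template": {
            "metadata": {"labels": {"app": "demo"}},
            "spec": {"containers": [{"name": "demo", "image": "argoproj/rollouts-demo:blue"}]},
        },
        "strategy": {"blueGreen": {
            "activeService": "demo-active",    # receives production traffic
            "previewService": "demo-preview",  # points at the new version before promotion
            "autoPromotionEnabled": False,     # wait for a manual promote
            "scaleDownDelaySeconds": 30,       # keep the old ReplicaSet briefly after the switch
        }},
    },
}

print(json.dumps(rollout_bluegreen, indent=2))
```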


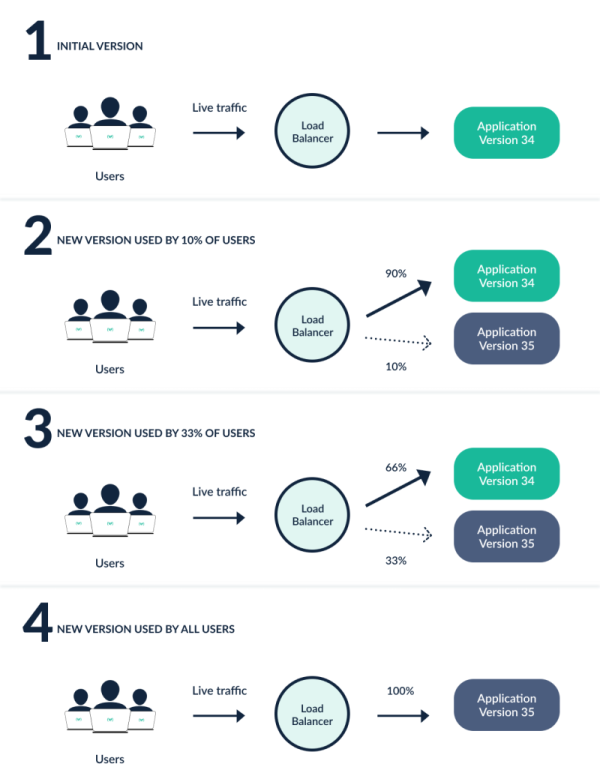

With Canary, a new version of an application is deployed alongside the old version. Only a subset of users will be directed to the new version and monitored for any issues. If no issues are detected, the new version will be gradually rolled out to all users by increasing the percentage of traffic directed to the new version.

Source: https://argoproj.github.io/argo-rollouts/concepts/#canary
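Canary behavior is driven by a list of steps under spec.strategy.canary.steps. This sketch (with illustrative weights) pauses indefinitely after 20% until someone promotes, then pauses for five minutes at 50%. Without a traffic router, Argo Rollouts approximates these weights by scaling replica counts.

```python
import json

canary_strategy = {"canary": {"steps": [
    {"setWeight": 20},
    {"pause": {}},                  # wait until manually promoted
    {"setWeight": 50},
    {"pause": {"duration": "5m"}},  # wait five minutes, then continue
    {"setWeight": 100},
]}}

def weight_progression(strategy):
    """Traffic weights the canary moves through, in order."""
    return [s["setWeight"] for s in strategy["canary"]["steps"] if "setWeight" in s]

print(weight_progression(canary_strategy))
print(json.dumps(canary_strategy, indent=2))
```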


The strategy you choose will depend on your use case and requirements. Be sure to read through this comparison to understand the differences.

One important thing to consider when using Argo Rollouts is pod placement on nodes which can trigger unwanted disruptions as node autoscalers look to optimize and scale-down underutilized nodes. To prevent this, you can use PodAntiAffinity to ensure that new versions of pods are not scheduled on the same node as old versions.
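Argo Rollouts exposes anti-affinity directly on the strategy. Here are two alternative canary snippets: the preferred form is a soft rule with a weight, and the required form is a hard rule. Which one fits depends on how much spare node capacity you have.

```python
import json

# Soft rule: the scheduler tries to keep new pods off nodes running the old version.
preferred = {"canary": {"antiAffinity": {
    "preferredDuringSchedulingIgnoredDuringExecution": {"weight": 1}}}}

# Hard rule: new pods are never scheduled next to old-version pods.
required = {"canary": {"antiAffinity": {
    "requiredDuringSchedulingIgnoredDuringExecution": {}}}}

print(json.dumps({"strategy": preferred}, indent=2))
```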

Describe Analysis Template and Analysis Run

As mentioned above, Argo Rollouts can automatically manage traffic routing using the Analysis feature. To do this, you define an AnalysisTemplate resource, and the Rollout controller creates AnalysisRun resources from it during a rollout.

The AnalysisTemplate resource defines the metrics and frequency that will be used to monitor the new version of an application. It will also include success and failure thresholds that will be used to determine whether the new version is performing as expected.

The AnalysisRun resource is the actual analysis being performed. Upon completion, it reports a status of Successful, Failed, or Inconclusive, and the Rollout controller uses this result to determine whether to continue with the rollout or roll back to the previous version.

Think of AnalysisTemplate as a template and AnalysisRun as an instance of that template.
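A sketch of an AnalysisTemplate that queries Prometheus for a success rate, plus a simplified evaluator showing how failureLimit affects the outcome. The Prometheus address and the Istio-based query are assumptions about your environment. The evaluator ignores errors, the Inconclusive outcome, and the other limits the real controller tracks.

```python
import json

analysis_template = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "AnalysisTemplate",
    "metadata": {"name": "success-rate"},
    "spec": {
        "args": [{"name": "service-name"}],
        "metrics": [{
            "name": "success-rate",
            "interval": "1m",
            "count": 5,
            "successCondition": "result[0] >= 0.95",
            "failureLimit": 1,  # tolerate at most one failed measurement
            "provider": {"prometheus": {
                "address": "http://prometheus.example.svc:9090",  # hypothetical
                "query": (
                    'sum(irate(istio_requests_total{destination_service=~"{{args.service-name}}",'
                    'response_code!~"5.*"}[5m])) / '
                    'sum(irate(istio_requests_total{destination_service=~"{{args.service-name}}"}[5m]))'
                ),
            }},
        }],
    },
}

def evaluate(measurements, threshold, failure_limit):
    """Simplified outcome: Failed once failed measurements exceed failure_limit."""
    failures = sum(1 for m in measurements if m < threshold)
    return "Failed" if failures > failure_limit else "Successful"

print(evaluate([0.99, 0.97, 0.90, 0.98, 0.99], 0.95, 1))  # one failure is tolerated
print(evaluate([0.90, 0.80, 0.99], 0.95, 1))              # two failures exceed the limit
print(json.dumps(analysis_template["spec"]["metrics"][0]["successCondition"]))
```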

There is quite a bit to learn about this feature when it comes to resource specification, so be sure to read through the Analysis & Progressive Delivery documentation as well as its various metric provider integrations to understand how to define and use the resources.

Practice

To see Argo Rollouts in action, install the controller and kubectl plugin then follow the quick start guide to deploy a simple application and manage its lifecycle.

Argo Events 12%

Argo Events enables you to implement event-driven workflow automation at scale within Kubernetes clusters.

Understand Argo Events Fundamentals

Event-Driven Architecture (EDA) is a foundation of many modern software systems. It allows for the creation of highly responsive and adaptable systems that can react to real-time events and changes in the environment.

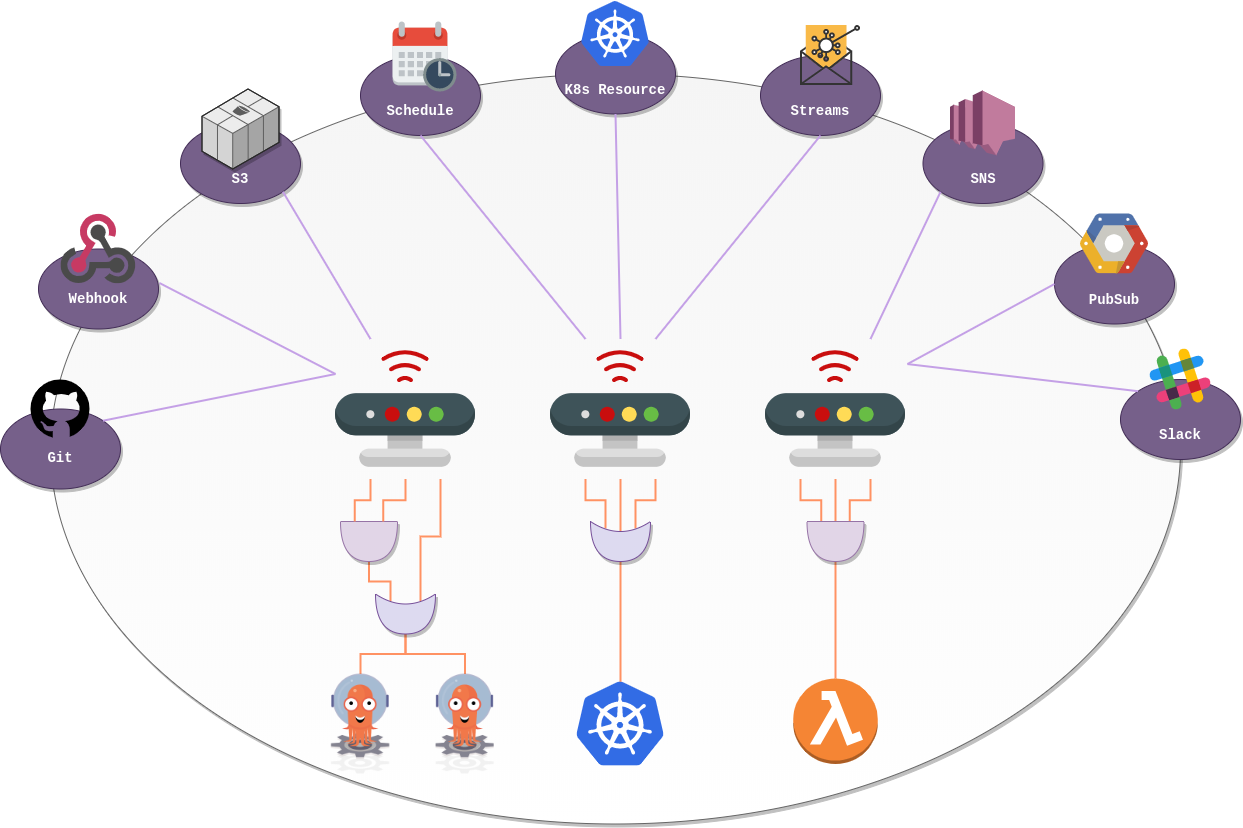

Below is an overview of Argo Events:

Source: https://argoproj.github.io/argo-events


With Argo Events, you can build event-driven workflows that respond to a wide range of events, such as messages in a queue, changes in a database, or even webhook notifications from external services. Currently Argo Events supports over 20 event sources and can trigger actions in response to those events including creation of Kubernetes resources, triggering of workflows, and more.

Understand Argo Event Components and Architecture

Understanding the architecture of Argo Events is essential for grasping how it operates and how its components interact with each other.

Source: https://argoproj.github.io/argo-events/concepts/architecture/

Let’s quickly go over the components:

- EventSource defines how events are consumed from external systems (for example webhooks, message queues, or S3) and publishes them to the EventBus.

- Sensor listens on the EventBus for the events it depends on and triggers actions to respond to those events.

- EventBus is the backbone that manages the delivery of events from event sources to sensors.

- Trigger responds to events by performing actions such as starting workflows, creating Kubernetes resources, or sending notifications.
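Here is a sketch of the components wired together: a webhook EventSource, and a Sensor whose dependency points at that source and whose trigger creates a Workflow. Port, endpoint, and names are illustrative. The helper checks that every Sensor dependency matches an event the EventSource actually defines, which is a common cause of "nothing happens" during practice.

```python
import json

event_source = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "EventSource",
    "metadata": {"name": "webhook"},
    "spec": {"webhook": {"example": {"port": "12000", "endpoint": "/example", "method": "POST"}}},
}

sensor = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "Sensor",
    "metadata": {"name": "webhook"},
    "spec": {
        "dependencies": [{"name": "payload", "eventSourceName": "webhook", "eventName": "example"}],
        "triggers": [{"template": {
            "name": "run-workflow",
            "k8s": {"operation": "create", "source": {"resource": {
                "apiVersion": "argoproj.io/v1alpha1",
                "kind": "Workflow",
                "metadata": {"generateName": "webhook-"},
                "spec": {"entrypoint": "main", "templates": [{
                    "name": "main",
                    "container": {"image": "busybox", "command": ["echo"], "args": ["event received"]},
                }]},
            }}},
        }}],
    },
}

def dependencies_resolve(sensor, sources):
    """True when every Sensor dependency names an event defined by some webhook EventSource."""
    known = {(s["metadata"]["name"], evt) for s in sources for evt in s["spec"]["webhook"]}
    return all((d["eventSourceName"], d["eventName"]) in known for d in sensor["spec"]["dependencies"])

print(dependencies_resolve(sensor, [event_source]))
print(json.dumps(sensor["spec"]["dependencies"], indent=2))
```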

For Webhook event sources, you can use a simple Kubernetes Secret to store the authentication token to protect the webhook endpoint.

You can also further validate the event data that is received by the event source using filters and expressions. This is useful when you need to ensure that the event data meets certain criteria before triggering an action.
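A sketch of a data filter on a Sensor dependency that only lets webhook events through when body.action is opened or reopened. The matching logic below is a toy, exact-match stand-in written in plain Python to show how path-based filtering works. Argo Events supports more (other value types, pattern matching, and expression filters).

```python
dependency = {
    "name": "payload",
    "eventSourceName": "webhook",
    "eventName": "example",
    "filters": {"data": [
        {"path": "body.action", "type": "string", "value": ["opened", "reopened"]},
    ]},
}

def lookup(event, path):
    """Follow a dotted path like body.action through nested dicts; None if missing."""
    node = event
    for part in path.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node

def passes_data_filters(event, filters):
    """Toy check: every filter's path must resolve to one of its allowed values."""
    return all(lookup(event, f["path"]) in f["value"] for f in filters)

filters = dependency["filters"]["data"]
print(passes_data_filters({"body": {"action": "opened"}}, filters))  # True
print(passes_data_filters({"body": {"action": "closed"}}, filters))  # False
```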

Practice

To see Argo Events in action, follow the quick start guide to deploy a simple event-driven workflow.

Summary

That was a lot of information to digest but I hope it gives you a good starting point to prepare for the CAPA exam. Be sure to read through the official documentation for each of the projects and try out some of the examples to get a better understanding of how they work.

In addition to all the URLs provided above, be sure to check out the awesome-argo site maintained by friends at Akuity for a ton of great resources on the Argo projects.

If this guide was helpful, please share it with your friends and colleagues. If you have any questions or feedback, feel free to leave a comment below or reach out on LinkedIn, BlueSky, or X.

Good luck with your exam and happy learning! 🚀