Does Workload Identity on AKS work across tenants?

Introduction

An interesting use case for Workload Identity came up recently. I was asked if a Pod in an AKS cluster that was deployed in one tenant can access Azure resources within another tenant.

I’ve configured Workload Identity on AKS many times, and I thought “in theory” it should “just work”, but I never tested it across tenants. So I decided to give it a try.

TL;DR: Yes, it does work.

What is Workload Identity?

If you are not familiar with Azure AD Workload Identity, it is a capability that allows an Azure identity to be assigned to an application workload, which can then use it to authenticate and access services and resources protected by Azure AD.

I know Azure AD has been renamed to Microsoft Entra ID but I’ll keep referring to it as Azure AD here 😉

In Kubernetes, this identity is assigned to a ServiceAccount, and Pods that use the ServiceAccount can access Azure resources without having to manage credentials.

If you’ve heard of or worked with Azure AD Pod Identity, this is the improved version and replacement for that. So, you should migrate to Workload Identity as soon as possible.

How does Workload Identity work?

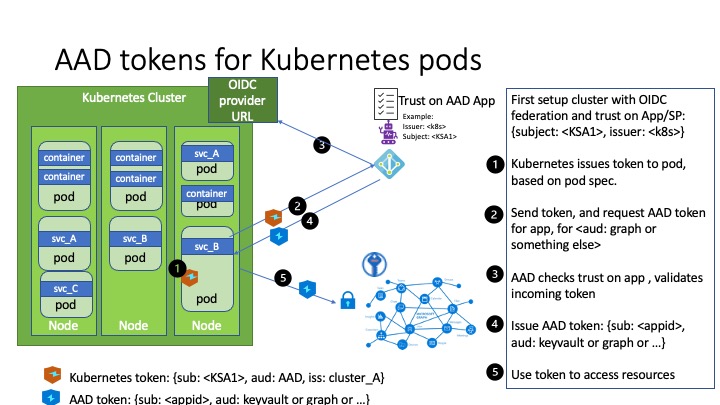

At a high level, Azure AD federates with a Kubernetes cluster to establish trust, and Workload Identity uses OpenID Connect (OIDC) to authenticate with Azure AD.

The Kubernetes cluster needs to be configured to use ServiceAccount token volume projection which places a ServiceAccount token into a Pod. This token is sent to Azure AD where it is validated for authenticity and exchanged for an Azure AD bearer token. From there, the Pod uses the Azure AD token to access Azure resources.

How does Azure AD know where to validate this token, you ask? It validates the token against the OIDC issuer endpoint that you specify when establishing the trust between Azure AD and your Kubernetes cluster.

With OIDC, the tenant that the AKS cluster resides in doesn’t really matter. As long as the OIDC endpoint of the Kubernetes cluster is accessible by Azure AD, the trust can be established.

The token exchange is handled within your app using the Azure Identity SDK or the Microsoft Authentication Library (MSAL). So there is a bit of application code that needs to be written to make this work. But the benefit is that you don’t have to manage credentials for Azure resources 🔒
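To make the exchange a little more concrete, here is a minimal sketch of the form body that such a token request carries, assuming the standard OAuth 2.0 client credentials flow with a JWT client assertion. The function name `exchangeForm` and the placeholder values are mine, not part of any SDK; in practice the Azure Identity SDK or MSAL builds and sends this request for you.

```go
package main

import (
	"fmt"
	"net/url"
)

// exchangeForm builds the form body that trades a ServiceAccount token
// (sent as the client assertion) for an Azure AD access token.
// Hypothetical helper for illustration; the SDK does this internally.
func exchangeForm(clientID, serviceAccountToken, scope string) url.Values {
	return url.Values{
		"grant_type":            {"client_credentials"},
		"client_id":             {clientID},
		"client_assertion_type": {"urn:ietf:params:oauth:client-assertion-type:jwt-bearer"},
		"client_assertion":      {serviceAccountToken},
		"scope":                 {scope},
	}
}

func main() {
	// Placeholder values only; a real request would use the managed
	// identity's client ID and the projected ServiceAccount token.
	form := exchangeForm(
		"00000000-0000-0000-0000-000000000000",
		"SERVICE_ACCOUNT_TOKEN",
		"https://management.azure.com/.default",
	)
	fmt.Println(form.Encode())
}
```

Notice that no client secret appears anywhere in the request. The ServiceAccount token takes its place, which is why there are no credentials to manage.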

The diagram depicted here gives a good visual representation of the process.

A cool thing about workload identity is that it works with any Kubernetes cluster, not just AKS, since it leverages native capabilities of Kubernetes. It’s just a little easier to configure on AKS since Azure handles the configuration on the control plane for you 😎

Let’s take a look at how you’d set up and use workload identity on AKS.

Cross-tenant Workload Identity on AKS

I’ll be demonstrating this using a simple Go app that reads Azure subscription resource quota information. The Go app will run in a Pod in an AKS cluster and will use Workload Identity to access Azure subscription info in another tenant.

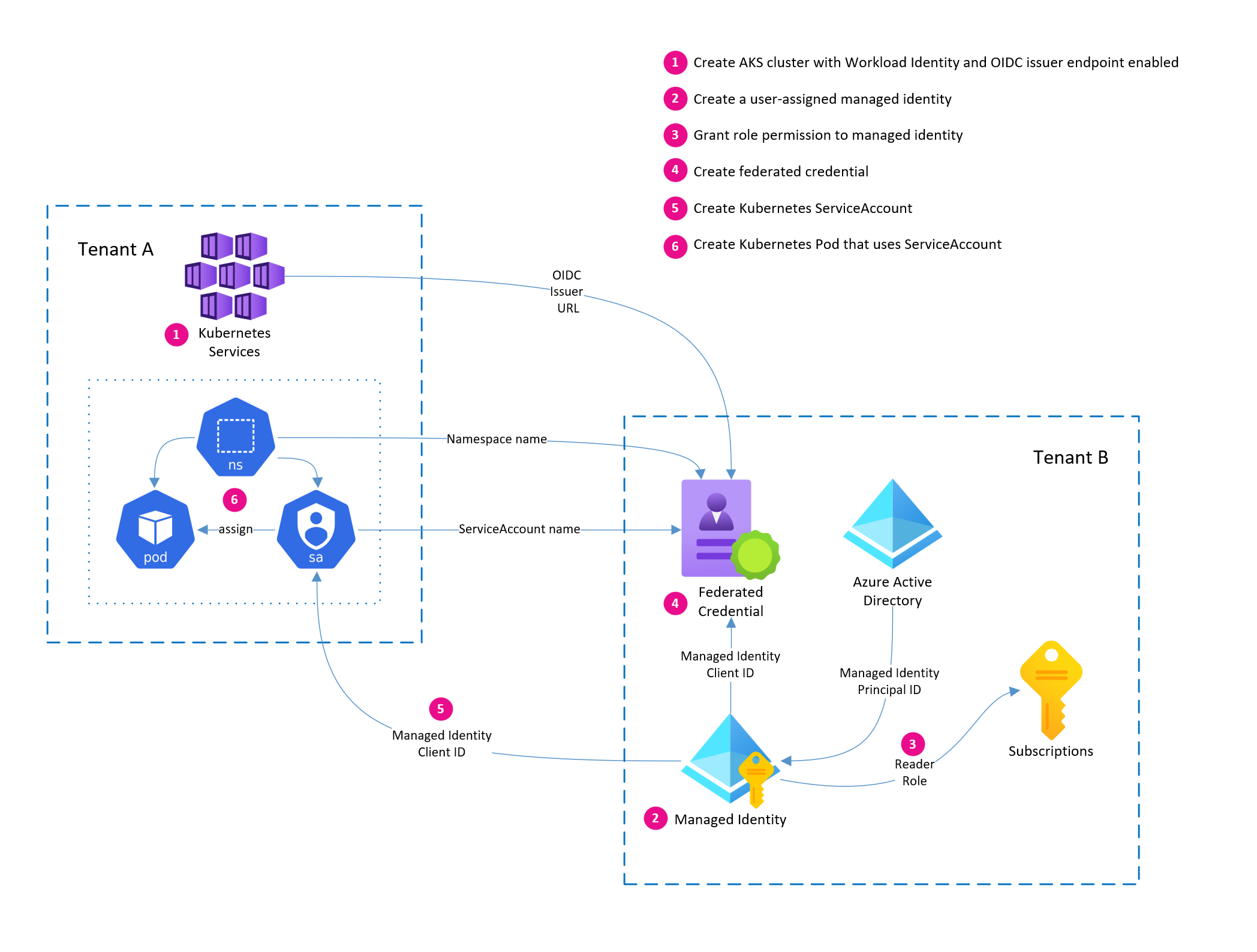

Here is a diagram that illustrates the configuration process.

Pre-requisites

In order to run through this demonstration, you will need the following:

- Two Azure subscriptions each in a separate tenant (I’ll call them Tenant A and Tenant B for simplicity)

- Azure CLI

- Go

- ko: Easy Go Containers

- VS Code

Configure Azure resources in Tenant A

In Tenant A, we will create an AKS cluster with Workload Identity enabled. This cluster will be used to deploy an app that will attempt to access Azure resources in Tenant B.

Create the AKS cluster with Workload Identity enabled

Log in to your subscription in Tenant A.

az login

Create the AKS cluster with Workload Identity and OIDC issuer features enabled.

az group create --name rg-wi-demo --location eastus

az aks create --name aks-wi-demo --resource-group rg-wi-demo --enable-oidc-issuer --enable-workload-identity

Azure AD will use the OIDC issuer endpoint to discover public signing keys and verify the authenticity of the service account token before exchanging it for an Azure AD token. Retrieve the issuer URL with the following command.

az aks show --name aks-wi-demo --resource-group rg-wi-demo --query "oidcIssuerProfile.issuerUrl" -o tsv

⚠️ IMPORTANT This OIDC issuer URL is very important to the process so make a note of it.
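If you are curious what "discovering the signing keys" looks like, here is a rough sketch based on standard OIDC discovery: the verifier appends `/.well-known/openid-configuration` to the issuer URL and reads the `jwks_uri` field from the returned document. The issuer URL and the sample document below are made up for illustration, and the helper names are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// discoveryURL returns the OIDC discovery document URL for an issuer,
// tolerating a trailing slash on the issuer URL.
func discoveryURL(issuer string) string {
	return strings.TrimSuffix(issuer, "/") + "/.well-known/openid-configuration"
}

// discoveryDoc holds the two discovery fields relevant to token validation.
type discoveryDoc struct {
	Issuer  string `json:"issuer"`
	JWKSURI string `json:"jwks_uri"`
}

// parseDiscovery extracts the issuer and signing-key URL from a discovery document.
func parseDiscovery(body []byte) (discoveryDoc, error) {
	var doc discoveryDoc
	err := json.Unmarshal(body, &doc)
	return doc, err
}

func main() {
	issuer := "https://example.com/issuer/" // hypothetical issuer URL
	fmt.Println(discoveryURL(issuer))

	// Hypothetical discovery document, trimmed to the fields used here.
	sample := []byte(`{"issuer":"https://example.com/issuer/","jwks_uri":"https://example.com/issuer/openid/v1/jwks"}`)
	doc, err := parseDiscovery(sample)
	if err != nil {
		panic(err)
	}
	fmt.Println(doc.JWKSURI)
}
```

You can open the discovery URL for your own cluster in a browser to confirm that it is publicly reachable, which is the only thing Azure AD in the other tenant needs.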

That’s all that is needed in Tenant A for now.

Configure Azure resources in Tenant B

In Tenant B, we need to create the managed identity, assign it permissions to read subscription information, and establish the trust between the managed identity and the AKS cluster in Tenant A.

Create the managed identity and assign permissions

Log in to your subscription in Tenant B.

az login

Create a user-assigned managed identity and retrieve the client ID.

az group create --name rg-wi-demo --location eastus

az identity create --name mi-wi-demo --resource-group rg-wi-demo --query clientId -o tsv

⚠️ IMPORTANT The clientId of the managed identity will be needed later, so make a note of it too.

Next, retrieve the principal ID of the managed identity.

principalId=$(az identity show -n mi-wi-demo -g rg-wi-demo --query principalId -o tsv)

Grant the newly created managed identity permissions to read subscription information.

subscriptionId=$(az account show --query "id" -o tsv)

az role assignment create --role Reader --assignee $principalId --scope /subscriptions/${subscriptionId}

Establish trust between AKS cluster in Tenant A and managed identity in Tenant B

Now we need to create the federated identity credential to establish trust between the AKS cluster in Tenant A and the managed identity in Tenant B. We will use the OIDC issuer URL from the AKS cluster and the name of the managed identity to link the two.

The other bit of important information here is the value of subject in the federated credential. Here we are using a value of system:serviceaccount:default:wi-demo-account, which follows the format system:serviceaccount:<namespace>:<name>. It identifies the wi-demo-account ServiceAccount in the default Namespace, which we will create later in the AKS cluster in Tenant A.

When authentication requests are made from our application pod, this value will be sent to Azure AD as the subject in the auth request, and Azure AD will accept the request only if this value matches what we set when establishing trust. So it is important here that the Namespace and ServiceAccount names match what we will use later.
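Here is a small sketch of that matching idea. It builds the subject string from a Namespace and ServiceAccount name, decodes the `iss` and `sub` claims from a token payload, and compares them exactly. This is only my illustration of the check, not Azure AD's actual implementation: it skips signature verification and the audience check (api://AzureADTokenExchange by default), and all helper names and the sample issuer are hypothetical.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// subjectFor builds the subject string Kubernetes uses for a ServiceAccount.
func subjectFor(namespace, serviceAccount string) string {
	return "system:serviceaccount:" + namespace + ":" + serviceAccount
}

// claims holds the token fields compared against the federated credential.
type claims struct {
	Issuer  string `json:"iss"`
	Subject string `json:"sub"`
}

// unsignedToken wraps a JSON payload in a JWT-shaped string for demo purposes.
// "e30" is the base64url encoding of an empty JSON header "{}".
func unsignedToken(payloadJSON string) string {
	return "e30." + base64.RawURLEncoding.EncodeToString([]byte(payloadJSON)) + ".sig"
}

// decodeClaims reads the payload segment of a JWT WITHOUT verifying the signature.
func decodeClaims(token string) (claims, error) {
	var c claims
	parts := strings.Split(token, ".")
	if len(parts) != 3 {
		return c, errors.New("token is not a three-part JWT")
	}
	payload, err := base64.RawURLEncoding.DecodeString(parts[1])
	if err != nil {
		return c, err
	}
	err = json.Unmarshal(payload, &c)
	return c, err
}

// matches reports whether the token's issuer and subject equal the configured values exactly.
func matches(c claims, issuer, subject string) bool {
	return c.Issuer == issuer && c.Subject == subject
}

func main() {
	issuer := "https://example.com/issuer/" // hypothetical issuer URL
	token := unsignedToken(`{"iss":"https://example.com/issuer/","sub":"system:serviceaccount:default:wi-demo-account"}`)

	c, err := decodeClaims(token)
	if err != nil {
		panic(err)
	}
	fmt.Println(matches(c, issuer, subjectFor("default", "wi-demo-account"))) // same Namespace and name
	fmt.Println(matches(c, issuer, subjectFor("other", "wi-demo-account")))   // different Namespace
}
```

The second comparison fails because only the Namespace differs, which is exactly the kind of mismatch that causes a token exchange to be rejected.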

Place your OIDC issuer URL in the oidcIssuerUrl variable below and run the following command to create the federated credential (establishing trust).

oidcIssuerUrl=<YOUR_OIDC_ISSUER_URL>

az identity federated-credential create \
  --name fc-wi-demo \
  --identity-name mi-wi-demo \
  --resource-group rg-wi-demo \
  --issuer $oidcIssuerUrl \
  --subject system:serviceaccount:default:wi-demo-account

Create the app to read subscription information

Now that we have all of the Azure resources configured, we can create a simple app that will attempt to read subscription information.

Initialize the Go project

# putting your go app in GOPATH is optional

cd $(go env GOPATH)

# create a new directory for the app

mkdir wi-demo

cd wi-demo

# initialize the go project

go mod init example.com/wi-demo

# install the Azure SDK for Go

go get github.com/Azure/azure-sdk-for-go/sdk/azidentity

go get github.com/Azure/azure-sdk-for-go/sdk/resourcemanager/quota/armquota

# create the main.go file

touch main.go

Add code to the main.go file

Open the directory in VS Code, then open main.go and add the following code.

Shout out to the Azure SDK for Go developers for putting together great docs with examples 🥳 I was able to lift code from here and here for this sample app.

package main

import (
	"context"
	"log"
	"os"

	"github.com/Azure/azure-sdk-for-go/sdk/azidentity"
	"github.com/Azure/azure-sdk-for-go/sdk/resourcemanager/quota/armquota"
)

func main() {
	subscriptionID := os.Getenv("AZURE_SUBSCRIPTION_ID")
	region := os.Getenv("AZURE_REGION")
	resourceName := os.Getenv("AZURE_RESOURCE_NAME")

	cred, err := azidentity.NewDefaultAzureCredential(nil)
	if err != nil {
		log.Fatalf("failed to obtain a credential: %v", err)
	}

	ctx := context.Background()

	clientFactory, err := armquota.NewClientFactory(cred, nil)
	if err != nil {
		log.Fatalf("failed to create client: %v", err)
	}

	clientResponse, err := clientFactory.NewClient().Get(ctx, resourceName, "subscriptions/"+subscriptionID+"/providers/Microsoft.Compute/locations/"+region, nil)
	if err != nil {
		log.Fatalf("failed to finish the request: %v", err)
	}

	clientJson, _ := clientResponse.CurrentQuotaLimitBase.MarshalJSON()
	log.Printf("limit: %s", clientJson)

	usagesClientResponse, err := clientFactory.NewUsagesClient().Get(ctx, resourceName, "subscriptions/"+subscriptionID+"/providers/Microsoft.Compute/locations/"+region, nil)
	if err != nil {
		log.Fatalf("failed to finish the request: %v", err)
	}

	usagesJson, _ := usagesClientResponse.CurrentUsagesBase.MarshalJSON()
	log.Printf("usage: %s", usagesJson)
}

The application will attempt to read the resource quota information using the armquota package and print the results to the console.

It will look for the following environment variables at runtime:

- AZURE_SUBSCRIPTION_ID: The subscription ID of the subscription in Tenant B

- AZURE_REGION: The Azure region where resources are deployed

- AZURE_RESOURCE_NAME: The name of the resource to read quota information for

If you are unsure of the values to use for AZURE_RESOURCE_NAME you can run the command az vm list-usage --location eastus to get a list of available resources.
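The sample app does not check whether these variables are set, so a missing value only shows up later as a failed request. As a rough sketch of how you might guard against that, the helpers below report missing variables and build the same scope string the app passes to the quota client. The names `missingEnv` and `quotaScope` are hypothetical and not part of the Azure SDK.

```go
package main

import (
	"fmt"
	"os"
)

// quotaScope builds the scope string that main.go passes to the quota client.
func quotaScope(subscriptionID, region string) string {
	return "subscriptions/" + subscriptionID + "/providers/Microsoft.Compute/locations/" + region
}

// missingEnv returns the names of required variables that are unset or empty.
// The lookup function is injected so the check is easy to exercise in isolation.
func missingEnv(getenv func(string) string, names ...string) []string {
	var missing []string
	for _, n := range names {
		if getenv(n) == "" {
			missing = append(missing, n)
		}
	}
	return missing
}

func main() {
	missing := missingEnv(os.Getenv, "AZURE_SUBSCRIPTION_ID", "AZURE_REGION", "AZURE_RESOURCE_NAME")
	if len(missing) > 0 {
		fmt.Println("missing environment variables:", missing)
		return
	}
	fmt.Println(quotaScope(os.Getenv("AZURE_SUBSCRIPTION_ID"), os.Getenv("AZURE_REGION")))
}
```

Failing fast with the variable names in the message tends to be easier to debug from Pod logs than an authorization or "not found" error from the API.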

Testing the app locally

Before we attempt to deploy the app to the AKS cluster, let’s test it locally to make sure it works.

Log into any Azure tenant as we will just use Azure CLI credentials for local testing.

az login

Since our app will be reading subscription quota information using the Azure Quota API, we’ll need to ensure the Microsoft.Quota provider is registered in the subscription.

First, check to see if the provider is registered.

az provider show --namespace Microsoft.Quota --query "registrationState"

If the registration state is NotRegistered, register the provider.

az provider register --namespace Microsoft.Quota

Wait until the registration state is Registered before continuing.

Once the provider is registered, we can run the app locally.

export AZURE_SUBSCRIPTION_ID=$(az account show --query "id" -o tsv)

export AZURE_REGION="eastus"

export AZURE_RESOURCE_NAME="cores"

go run main.go

If all went well, the app will run to completion and you should see output with JSON printed to the console.

Deploy the app to AKS

Once we confirm the app works locally, it’s time to push it to a container registry so that it can be pulled from within the AKS cluster.

Publish the container to a container registry

I like using ko to build and publish Go apps to a container registry. It’s super easy to use and doesn’t require Docker to be installed on your machine.

I also like to use ttl.sh for quick container testing. It’s a free service that allows you to push a container to a registry and have it available for a specified period of time by using tags (e.g., 1h for 1 hour and 1m for 1 minute). Being able to use ephemeral containers is perfect for quick testing and demos.

export KO_DOCKER_REPO=ttl.sh

ko build . --tags=1h

You should see something like this in the third line of the output. This is your image name:

Publishing ttl.sh/wi-demo-09748ea200b8b1398c659943f01b0121:1h

⚠️ IMPORTANT ko will generate an image name for you with the tag you specify, so make a note of it as we will use it later.

Deploy the app to AKS

Switch the Azure CLI back to Tenant A (run az login again if needed), then retrieve credentials for the AKS cluster.

az aks get-credentials --name aks-wi-demo --resource-group rg-wi-demo

Using the clientId of the user-assigned managed identity, create a new ServiceAccount.

kubectl apply -f - <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    azure.workload.identity/client-id: <YOUR_USER_ASSIGNED_MANAGED_IDENTITY_CLIENT_ID>
  name: wi-demo-account
  namespace: default
EOF

Notice the azure.workload.identity/client-id annotation on the ServiceAccount. This is what tells the AKS cluster which managed identity to use for Workload Identity.

Using the image name, and Tenant B’s tenant and subscription IDs, create a new Pod.

kubectl apply -f - <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: wi-demo-app
  namespace: default
  labels:
    azure.workload.identity/use: "true"
spec:
  serviceAccountName: wi-demo-account
  containers:
  - name: wi-demo-app
    image: <YOUR_IMAGE_NAME>
    env:
    - name: AZURE_TENANT_ID
      value: <TENANT_B_ID>
    - name: AZURE_SUBSCRIPTION_ID
      value: <TENANT_B_SUBSCRIPTION_ID>
    - name: AZURE_REGION
      value: eastus
    - name: AZURE_RESOURCE_NAME
      value: cores
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
EOF

Notice the azure.workload.identity/use label and the serviceAccountName: wi-demo-account in the Pod spec. This is what tells the AKS cluster to use Workload Identity for this Pod.

⚠️ IMPORTANT The AZURE_TENANT_ID environment variable is used by the Azure Identity SDK to authenticate with Azure AD. If this were not set, the SDK would attempt to authenticate with the tenant that the AKS cluster is in. Since we need the app to authenticate to Tenant B, we need to explicitly set this environment variable.
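To see why the tenant ID matters, here is a rough sketch of how the token endpoint is derived from the environment inside the Pod. It assumes the variables that Workload Identity makes available to the container (AZURE_CLIENT_ID, AZURE_TENANT_ID, AZURE_AUTHORITY_HOST, AZURE_FEDERATED_TOKEN_FILE) and falls back to the public cloud authority host when none is set. The struct and function names are hypothetical; the real logic lives in the Azure Identity SDK.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// workloadIdentityEnv groups the environment values a workload identity
// credential relies on inside the Pod.
type workloadIdentityEnv struct {
	ClientID      string
	TenantID      string
	AuthorityHost string
	TokenFile     string
}

// loadEnv reads the values through an injected lookup function.
func loadEnv(getenv func(string) string) workloadIdentityEnv {
	host := getenv("AZURE_AUTHORITY_HOST")
	if host == "" {
		host = "https://login.microsoftonline.com/" // assumed public cloud default
	}
	return workloadIdentityEnv{
		ClientID:      getenv("AZURE_CLIENT_ID"),
		TenantID:      getenv("AZURE_TENANT_ID"),
		AuthorityHost: host,
		TokenFile:     getenv("AZURE_FEDERATED_TOKEN_FILE"),
	}
}

// tokenURL shows that the tenant ID decides which tenant receives the token request.
func (e workloadIdentityEnv) tokenURL() string {
	return strings.TrimSuffix(e.AuthorityHost, "/") + "/" + e.TenantID + "/oauth2/v2.0/token"
}

func main() {
	env := loadEnv(os.Getenv)
	fmt.Println("client ID:     ", env.ClientID)
	fmt.Println("token file:    ", env.TokenFile)
	fmt.Println("token endpoint:", env.tokenURL())
}
```

Running something like this inside the Pod is a quick way to confirm that the token request is headed to Tenant B rather than the cluster's own tenant.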

With the Pod running, we can check the logs to see if the app was able to read subscription quota information.

kubectl logs wi-demo-app

You should see output similar to this:

2023/08/24 17:48:03 clientResponse: {"id":"/subscriptions/00000000-0000-0000-0000-000000000000/providers/Microsoft.Compute/locations/eastus/providers/Microsoft.Quota/quotas/cores","name":"cores","properties":{"isQuotaApplicable":true,"limit":{"limitObjectType":"LimitValue","limitType":"Independent","value":20},"name":{"localizedValue":"Total Regional vCPUs","value":"cores"},"properties":{},"unit":"Count"},"type":"Microsoft.Quota/Quotas"}

2023/08/24 17:48:04 usagesClientResponse: {"id":"/subscriptions/00000000-0000-0000-0000-000000000000/providers/Microsoft.Compute/locations/eastus/providers/Microsoft.Quota/usages/cores","name":"cores","properties":{"isQuotaApplicable":true,"name":{"localizedValue":"Total Regional vCPUs","value":"cores"},"properties":{},"unit":"Count","usages":{}},"type":"Microsoft.Quota/Usages"}

If you see output similar to this, then you have successfully used Workload Identity to access Azure resources in another tenant 🎉
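If you want to do something with the result rather than just print it, the JSON can be decoded into a small struct. The sketch below is only an illustration built from the fields visible in the sample output above; the `quotaResponse` type and `parseQuota` function are hypothetical, and the armquota package already provides typed models that you would normally use instead.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// quotaResponse mirrors only the fields of the sample output that are read here.
type quotaResponse struct {
	Name       string `json:"name"`
	Properties struct {
		Limit struct {
			Value int `json:"value"`
		} `json:"limit"`
		Name struct {
			LocalizedValue string `json:"localizedValue"`
		} `json:"name"`
		Unit string `json:"unit"`
	} `json:"properties"`
}

// parseQuota decodes the JSON printed by the sample app.
func parseQuota(raw string) (quotaResponse, error) {
	var q quotaResponse
	err := json.Unmarshal([]byte(raw), &q)
	return q, err
}

func main() {
	// Trimmed copy of the limit line from the sample output.
	raw := `{"name":"cores","properties":{"isQuotaApplicable":true,"limit":{"limitObjectType":"LimitValue","limitType":"Independent","value":20},"name":{"localizedValue":"Total Regional vCPUs","value":"cores"},"properties":{},"unit":"Count"},"type":"Microsoft.Quota/Quotas"}`

	q, err := parseQuota(raw)
	if err != nil {
		panic(err)
	}
	fmt.Printf("%s (%s): limit %d %s\n",
		q.Properties.Name.LocalizedValue, q.Name, q.Properties.Limit.Value, q.Properties.Unit)
}
```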

Conclusion

In this article, we explored using Workload Identity on AKS to access Azure resources in another tenant. I just assumed it would work, but it was nice to see it in action. The steps to make it work across tenants are not really anything special. The OIDC issuer URL is the key piece of information that is needed to establish trust between the AKS cluster and the managed identity, regardless of which tenant they are in.

I’ll admit the process to configure workload identity in general is a bit involved, but as long as you have a good understanding of the moving parts, it’s not too bad.

The Azure Workload Identity team also created a command line utility called azwi, which may help automate some of the steps, so be sure to check that out.

The sample app I demonstrated here is just one use case where you can use Workload Identity to read subscription information. This concept can be applied to any Azure resource that is protected by Azure AD.

So, if you need to access Azure resources from a workload running in Kubernetes and have the flexibility to modify your application code (using Azure Identity SDK), I highly recommend you use Workload Identity.

If you have any feedback or suggestions, please feel free to reach out to me on Twitter or LinkedIn.

Peace ✌️

Resources

- What are workload identities?

- What are managed identities for Azure resources?

- Azure services that can use managed identities to access other services

- Use Azure AD workload identity with Azure Kubernetes Service (AKS)

- Tutorial: Use a workload identity with an application on Azure Kubernetes Service (AKS)

- Azure AD Workload Identity (GitHub)