Soaring to New Heights with Kaito: The Kubernetes AI Toolchain Operator

Earlier today at KubeCon Europe 2024, Jorge Palma of the AKS team gave a keynote talk on Kaito, the Kubernetes AI Toolchain Operator.

Next keynote speaker @jorgefpalma 🙌 we are truly living in the AI revolution pic.twitter.com/ATxLWUKDeD

— CNCF (@CloudNativeFdn) March 20, 2024

This tool was released as an open-source project a few months ago, so you may or may not have heard of it.

So if you don’t know, now you know…

You’re probably thinking, “but what is it, and what can it do for me?” 🤔

What’s in a name?

Kaito is a cool name, and the word rolls off the tongue quite nicely. After a bit of searching, I came across a definition: Kaito is a Japanese name commonly given to boys, and it carries deep meaning and symbolism in Japanese culture.

According to Japanese Board, the name is made up of two kanji characters: 海 and 斗

- 海 (kai) means “sea” or “ocean”

- 斗 (to) has several meanings, such as a “measuring cup” or the “Big Dipper” of Chinese astrology

Ultimately, the name symbolizes guidance and direction: aligning oneself with the stars over the vastness of the sea. It’s a fitting name for a tool that aims to help you navigate what can be a sea of complexity, namely AI on Kubernetes 😅

What is Kaito?

Okay, enough with the philosophical stuff. Let’s get to the meat of it.

Kaito is a Kubernetes Operator that aims to help you with your AI workloads on Kubernetes. In its current form, it helps you reduce your time to inference when deploying open-source AI models with inferencing endpoints on Kubernetes.

Why use Kaito?

If you’re working with AI today, especially OpenAI or Azure OpenAI, you’re probably familiar with the fact that the model is hosted in the cloud and that you primarily interact with it via a REST API. The Model-as-a-Service (MaaS) approach is great for many use cases, but there are times when you need to run the model closer to the data for reasons like data sovereignty, compliance, or latency.

Also, you might not want to run OpenAI’s models because they are not truly open-source and may feel like a black box when it comes to understanding how they were built and how they work. You might want to run your own models, or models from the open-source community, many of which can be found on Hugging Face’s model hub.

Kaito aims to help you with this by providing a way to deploy open-source AI models as inferencing services on Kubernetes. It cuts through all the minutiae of deploying, scaling, and managing AI and GPU-based workloads on Kubernetes.

More specifically, it helps you with the following:

- Automated GPU node provisioning and configuration: Kaito will automatically provision and configure GPU nodes for you. This can help reduce the operational burden of managing GPU nodes, configuring them for Kubernetes, and tuning model deployment parameters to fit GPU profiles.

- Reduced cost: Kaito can help you save money by splitting inferencing across lower-end GPU nodes, which may also be more readily available and cost less than high-end GPU nodes.

- Support for popular open-source LLMs: Kaito offers preset configurations for popular open-source LLMs. This can help you deploy and manage open-source LLMs on AKS and integrate them with your intelligent applications.

- Fine-grained control: You get full control over data security and privacy, transparency into how the model is developed and configured, and the ability to fine-tune the model for your specific use case.

- Network and data security: You can ensure these models are ring-fenced within your organization’s network and/or ensure the data never leaves the Kubernetes cluster.
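To get a feel for why splitting a model across several smaller GPUs can work, here is a rough back-of-the-envelope sketch that estimates how many GPUs are needed just to hold a model’s weights. This is not how Kaito sizes its presets; the helper name is hypothetical, and the 2 bytes per parameter (fp16) and 80% usable-memory figures are assumptions that ignore activations, KV cache growth, and framework overhead.

```python
import math


def min_gpus_for_weights(params_billions: float, gpu_mem_gib: float,
                         bytes_per_param: int = 2, headroom: float = 0.8) -> int:
    """Hypothetical helper: rough lower bound on GPUs needed to hold the weights.

    bytes_per_param=2 assumes fp16/bf16 weights (an assumption).
    headroom is the fraction of each GPU's memory assumed usable for weights;
    the rest is left for activations, KV cache, and the CUDA context.
    """
    if params_billions <= 0 or gpu_mem_gib <= 0:
        raise ValueError("model size and GPU memory must be positive")
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    # Total weight size in GiB.
    weight_gib = params_billions * 1e9 * bytes_per_param / 2**30
    usable_per_gpu = gpu_mem_gib * headroom
    # Round up: a partial GPU is still a whole GPU.
    return math.ceil(weight_gib / usable_per_gpu)


# Standard_NC12s_v3 has two 16 GB V100 GPUs.
print(min_gpus_for_weights(7, 16))  # 2
print(min_gpus_for_weights(7, 80))  # 1
```

Under these assumptions, a 7B model is about 13 GiB of fp16 weights, which slightly exceeds the usable share of a single 16 GB GPU but fits comfortably across two.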

Kaito Architecture

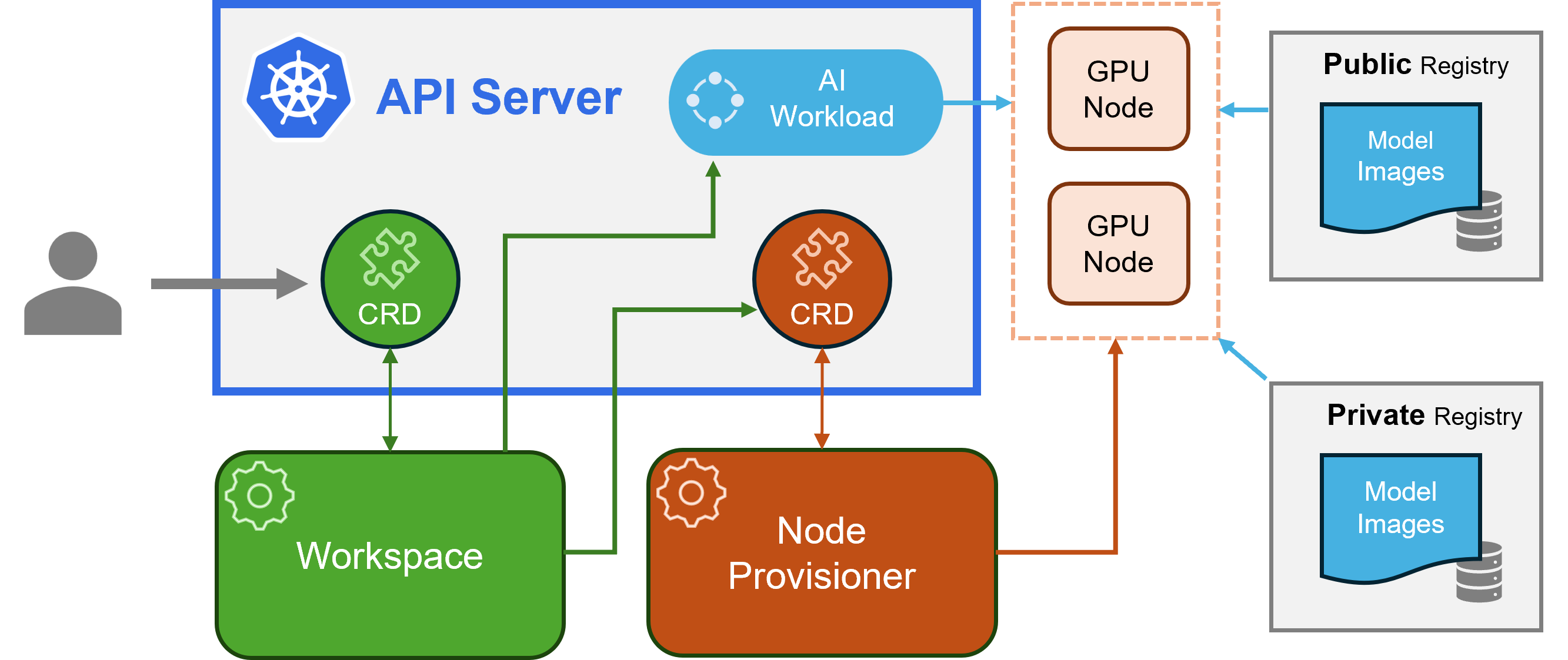

Kaito is built using the Kubernetes controller design pattern. The diagram above, from the Kaito GitHub repo, gives a high-level overview of its architecture.

The operator consists of two controllers:

- Workspace controller: This controller reconciles the Workspace custom resource. It creates a machine custom resource to trigger node auto-provisioning, and it creates the inference workload.

- Node provisioner controller: This controller uses the machine custom resource to interact with the Workspace controller. It leverages Karpenter APIs to add new GPU nodes to the AKS cluster.

As a cluster operator, you only need to run a couple of helm install commands to install the controllers, then create a Workspace custom resource. From there, the Workspace controller takes over: it creates a machine custom resource to trigger node auto-provisioning and creates the inference workload based on a preset configuration.
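The reconcile flow described above can be sketched as a toy, level-triggered loop. This is not Kaito’s code (Kaito is written in Go against the real Kubernetes API); it is a minimal Python model of the pattern, and the names Cluster, reconcile, and the "-machine" suffix are hypothetical. Each call compares desired state (the Workspace) with observed state and takes one step toward convergence, which is why controllers can safely be re-run at any time.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Workspace:
    name: str
    instance_type: str
    preset: str


@dataclass
class Cluster:
    # Hypothetical in-memory stand-in for the Kubernetes API server.
    machines: Dict[str, bool] = field(default_factory=dict)    # name -> node ready?
    deployments: Dict[str, str] = field(default_factory=dict)  # name -> preset


def reconcile(ws: Workspace, cluster: Cluster) -> str:
    """One pass of a hypothetical Workspace reconcile loop."""
    machine_name = f"{ws.name}-machine"  # hypothetical naming scheme
    if machine_name not in cluster.machines:
        # Step 1: request a GPU node; a node provisioner would act on this.
        cluster.machines[machine_name] = False
        return "machine-created"
    if not cluster.machines[machine_name]:
        # Node is not ready yet: requeue and check again on the next pass.
        return "waiting-for-node"
    if ws.name not in cluster.deployments:
        # Step 2: node is ready, so create the inference workload from the preset.
        cluster.deployments[ws.name] = ws.preset
        return "inference-created"
    # Desired and observed state match: nothing to do.
    return "in-sync"


ws = Workspace("workspace-falcon-7b-instruct", "Standard_NC12s_v3", "falcon-7b-instruct")
cluster = Cluster()
print(reconcile(ws, cluster))  # machine-created
print(reconcile(ws, cluster))  # waiting-for-node
cluster.machines[f"{ws.name}-machine"] = True  # simulate the node provisioner
print(reconcile(ws, cluster))  # inference-created
print(reconcile(ws, cluster))  # in-sync
```

Splitting the work this way mirrors the two-controller design: the Workspace side only records that a node is wanted, and a separate provisioner is responsible for making that node exist.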

The Kaito team has done quite a bit of work to ensure some of the most popular open-source models like Meta’s Llama2, TII’s Falcon, Mistral AI’s Mistral, Microsoft’s Phi-2, and others are containerized properly and work well on Kubernetes. So they’ve created what they call presets for these models. This means you can deploy these models with a few lines of YAML and Kaito will take care of the rest.

Here is an example of a Workspace custom resource:

```yaml
apiVersion: kaito.sh/v1alpha1
kind: Workspace
metadata:
  name: workspace-falcon-7b-instruct
resource:
  instanceType: "Standard_NC12s_v3"
  labelSelector:
    matchLabels:
      apps: falcon-7b-instruct
inference:
  preset:
    name: "falcon-7b-instruct"
```

Only 12 lines of YAML 🎉
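If you generate manifests from code (for example, to stamp out one Workspace per preset), the same object can be built as a plain Python dict. This is a minimal sketch: the helper name workspace_manifest is hypothetical, and the field layout simply mirrors the v1alpha1 example above, so check the Workspace CRD in the Kaito repo for the version you run.

```python
import json


def workspace_manifest(name: str, instance_type: str, preset: str, app_label: str) -> dict:
    """Hypothetical helper: build the Workspace object shown in the YAML above."""
    return {
        "apiVersion": "kaito.sh/v1alpha1",
        "kind": "Workspace",
        "metadata": {"name": name},
        "resource": {
            "instanceType": instance_type,
            "labelSelector": {"matchLabels": {"apps": app_label}},
        },
        "inference": {"preset": {"name": preset}},
    }


manifest = workspace_manifest(
    name="workspace-falcon-7b-instruct",
    instance_type="Standard_NC12s_v3",
    preset="falcon-7b-instruct",
    app_label="falcon-7b-instruct",
)
# JSON is valid input for kubectl apply -f -, so this output can be piped to it.
print(json.dumps(manifest, indent=2))
```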

If you’re wondering if this works on different cloud providers, unfortunately the answer right now is “no”. Kaito is currently only supported on Azure. But with this being an open-source project, you are welcome to contribute and help make it work on other cloud providers 🤝

Getting Started

Getting started with Kaito is pretty straightforward. You can visit the Kaito GitHub repo and follow the installation instructions to start.
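Once a Workspace reports ready, a quick way to smoke-test it is to send a prompt to its inference service from inside the cluster. The sketch below only builds the HTTP request. The /chat path and the {"prompt": ...} body follow the curl example in the Kaito README at the time of writing, and the assumption that the service is reachable by the workspace name on port 80 is also mine; treat all three as assumptions and check the docs for your preset and Kaito version. The helper name build_chat_request is hypothetical.

```python
import json
import urllib.request


def build_chat_request(host: str, prompt: str) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a POST to a preset's /chat endpoint.

    The path and body shape are assumptions based on the Kaito README example.
    """
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=f"http://{host}/chat",
        data=body,
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


# Assumes the inference service is named after the workspace and listens on port 80.
req = build_chat_request("workspace-falcon-7b-instruct", "What is Kubernetes?")

# To actually call the service from a pod in the same namespace:
# with urllib.request.urlopen(req, timeout=60) as resp:
#     print(resp.read().decode("utf-8"))
```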

I’ve also recorded a “Learn Live” session on the @MicrosoftReactor YouTube channel with Ishaan Sehgal from the Kaito team, where we did an in-depth walk-through of how to get started. Be sure to check that video out.

The workshop material for the session can be found here: Open Source Models on AKS with Kaito

As with any big event like KubeCon, there was a slew of announcements. One of them was the release of the Kaito add-on for AKS (currently in preview), which simplifies things even more by having AKS install and manage Kaito in your cluster for you. Documentation for that can be found here: Deploy an AI model on Azure Kubernetes Service (AKS) with the AI toolchain operator (preview)

Lastly, there is a neat blog article that walks you through some of the steps listed above and adds an interesting use case: using Kaito and the LLaMA2 model to forecast energy usage. Be sure to check that out as well: 2.1 Forecasting Energy Usage with Intelligent Apps Part 1: Laying the Groundwork with AKS, KAITO, and LLaMA

More Presets

The Kaito team publishes all the models they support as presets in the Kaito GitHub repo and is working on adding more popular open-source AI models. If you have a model you’d like to see supported, you can open an issue on the Kaito GitHub repo and let the team know. They are always looking for feedback and contributions from the community.

Conclusion

By now, you should have a good understanding of what Kaito is and what it aims to help you with as a Kubernetes Operator. Being able to deploy open-source AI models as inferencing services on Kubernetes will be a game-changer for a lot of folks. But inferencing is just one part of the story, and it’s where the Kaito journey begins. The team is also looking at how to help you fine-tune these open-source AI models on Kubernetes in the future, so stay tuned.

So leverage Kaito for your AI workloads in Kubernetes. The name says it all.

If you have any questions or feedback, please feel free to leave a comment below or reach out to me on Twitter or LinkedIn. Also be sure to follow the @TheAKSCommunity YouTube channel for more on this project as it evolves.

Until next time,

Peace ✌️