Service Mesh Considerations

“Build microservices”, they said… “it’ll be fun”, they said…

There are many reasons why you would want to deploy a solution based on the microservices architectural pattern, but it comes at a cost. More microservices means more deployments to manage, more microservices to connect, more microservices to secure… yeah, it gets complex real quick.

If you’re just getting started with microservices or have a small number of microservices deployed, you may have heard of the term “service mesh”, but not needed one yet.

In this article, I will describe what a service mesh is, the problems it can solve, and help you reason through when you should (or shouldn’t) implement one.

What is a service mesh?

The concept of a service mesh is not new; it has been around since 2016. Let’s start by reviewing the definition: a service mesh is a configurable layer of infrastructure that provides a way to establish and secure communications between microservices. It operates at L7 within the OSI model and handles many cross-cutting concerns that both application developers and cluster operators will appreciate, including service discovery, security, resiliency, and observability.

To me, the best part is that it can do it all without requiring the application developer to write code. This is made possible by an architectural pattern that is common to many service mesh implementations.

Basic architecture of a service mesh

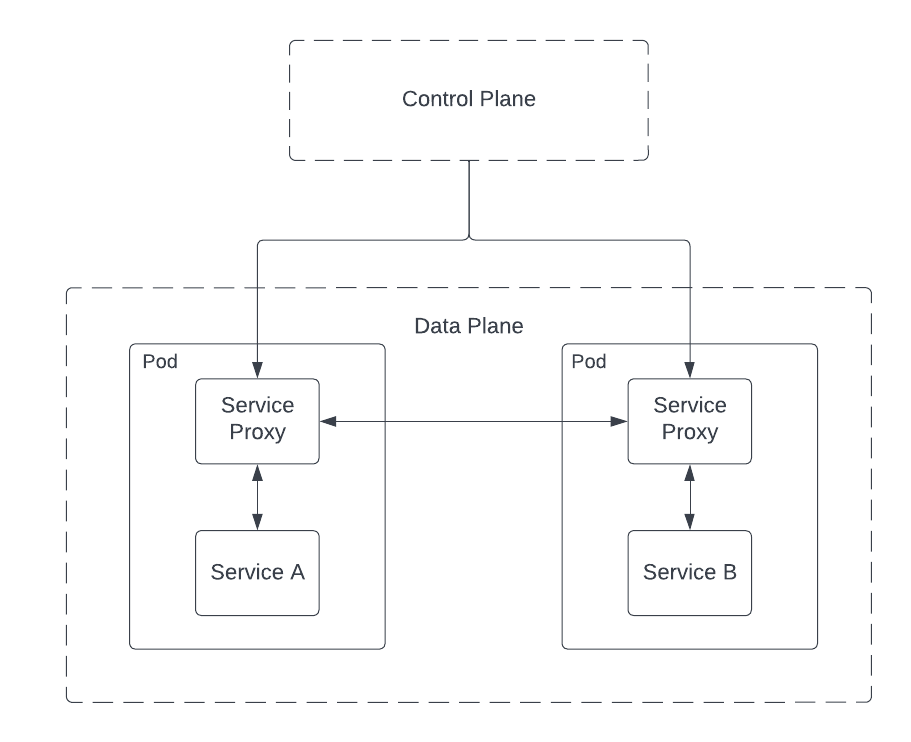

It is very common that a service mesh deploys a control plane and a data plane. The control plane does what you might expect; it controls the service mesh and gives you the ability to interact with it. Many service meshes implement the Service Mesh Interface (SMI) which is an API specification to standardize the way cluster operators interact with and implement features.

Keep an eye on the Gateway API GAMMA Initiative, as it is currently evolving with the goal of streamlining how service meshes can implement the Gateway API and reducing some overlap.

With this architecture, cluster operators apply configuration to the control plane and the control plane sends the configuration to data plane components.

The data plane is often implemented by automatically injecting a service proxy into a pod using the sidecar pattern. More often than not, you’ll find Envoy Proxy as part of the implementation. The proxy container sits next to a service container in a pod and the two containers communicate over the local loopback interface.

As you can see in the diagram above, microservices don’t talk to each other directly. Traffic flows through the intermediary service proxy, which does all the heavy lifting so you don’t need to modify your application code to get started.

What problems does a service mesh help you solve?

Now that we know what a service mesh is and understand its high-level architecture, let’s dig a little bit deeper into problems it will help you solve.

Service discovery

Services need to know where other services are on the network. Calling a service by its name is much easier than by IP and a service mesh will help you achieve that through service discovery. Kubernetes offers service discovery out-of-the-box and the service mesh will be able to leverage this to populate its own service registry for name resolution. As new services come online, their endpoints are automatically added to the service registry.
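To make the idea of a service registry concrete, here is a minimal sketch of a name-to-endpoints lookup. It is a toy in-memory model, not how any particular service mesh implements its registry; the `ServiceRegistry` class, the `orders` service name, and the addresses are all made up for illustration.

```python
from collections import defaultdict


class ServiceRegistry:
    """Toy in-memory registry mapping a service name to its live endpoints."""

    def __init__(self):
        self._endpoints = defaultdict(set)

    def register(self, name, address):
        # Called when a new endpoint for the service comes online.
        self._endpoints[name].add(address)

    def deregister(self, name, address):
        # Called when an endpoint goes away; unknown addresses are ignored.
        self._endpoints[name].discard(address)

    def resolve(self, name):
        # Return the known endpoints for a service name, sorted for stable output.
        endpoints = sorted(self._endpoints.get(name, ()))
        if not endpoints:
            raise LookupError(f"no endpoints registered for {name!r}")
        return endpoints


# Hypothetical service name and pod addresses.
registry = ServiceRegistry()
registry.register("orders", "10.0.1.7:8080")
registry.register("orders", "10.0.2.3:8080")
print(registry.resolve("orders"))
```

Callers only ever ask for `orders`; which IP addresses sit behind that name can change without them noticing.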

Service-to-service communication

As discussed in the architecture section above, service-to-service invocation is made possible by the service proxy that sits alongside the microservice. Coupled with the service discovery feature, you’ll always have the most up-to-date information for microservice endpoints participating in the service mesh. Communication flows through the service proxies (again, no direct communication between microservices) and additional capabilities are added to enhance and/or secure it.

Security

Kubernetes allows open and unencrypted traffic between services by default. With a service mesh in place, service-to-service traffic can be encrypted using mutual TLS (mTLS). Typically, the control plane generates certificates for every service in the mesh and distributes them to each service proxy. When two microservices communicate, the service proxy on each end verifies the identity of the other using its certificate, and the traffic is then encrypted so that only the proxy on the receiving end can decrypt it.
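What makes TLS “mutual” is that the server also demands a certificate from the client. The sketch below shows that one difference using Python’s standard `ssl` module. It is only an illustration of the concept: in a mesh the proxies do this for you, and the function names and the optional file path parameters here are assumptions, not part of any mesh’s API.

```python
import ssl


def mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Server-side TLS context that also requires a valid client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Plain TLS servers do not ask for a client certificate; mTLS servers do.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA that signed client certs
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # our identity
    return ctx


def mtls_client_context(cert_file=None, key_file=None, ca_file=None):
    """Client-side TLS context that verifies the server and presents its own cert."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # verifies the server by default
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx


server_ctx = mtls_server_context()
print(server_ctx.verify_mode == ssl.CERT_REQUIRED)
```

With a service mesh, the certificates passed in here would be the ones the control plane issues and rotates, so none of this ends up in your application code.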

Traffic within the cluster (East/West) is not all that a service mesh can secure. It can also secure traffic that is coming into your cluster from the outside (North/South) through your ingress controller. It’s fairly common to see TLS termination at the ingress. When a service mesh is made aware of the ingress, it can also verify, authenticate, and re-encrypt traffic from your ingress to the backend microservice to enable full end-to-end encryption.

Resiliency

A service mesh can also help you improve resiliency by implementing features such as automatic retries, deadlines, circuit breakers, and fault injection. Not all service meshes will support every feature, so you will need to consult each vendor’s documentation. However, most service meshes allow you to configure retry logic. This gives you the flexibility to define groups of microservices and define how they will attempt to recover from failures. Some configuration options include the number of retries and how long to back off before retrying. Additionally, circuit breaking is a common resiliency pattern that is implemented to prevent cascading failures throughout the rest of the system.
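If you have not come across retries with back-off or circuit breakers before, the sketch below shows roughly what the proxy does on your behalf. It is a simplified illustration under my own assumptions: the class and function names, the default thresholds, and the exponential back-off schedule are all hypothetical, and real meshes expose these as configuration rather than code.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are rejected immediately."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def call_with_retries(func, max_retries=3, base_delay=0.1, sleep=time.sleep):
    """Call func, retrying up to max_retries times with exponential back-off."""
    for attempt in range(max_retries + 1):
        try:
            return func()
        except CircuitOpenError:
            raise  # do not keep hammering a dependency the breaker gave up on
        except Exception:
            if attempt == max_retries:
                raise
            sleep(base_delay * 2 ** attempt)
```

The two work together: retries smooth over brief blips, while the breaker stops retries from piling onto a service that is already struggling, which is how cascading failures start.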

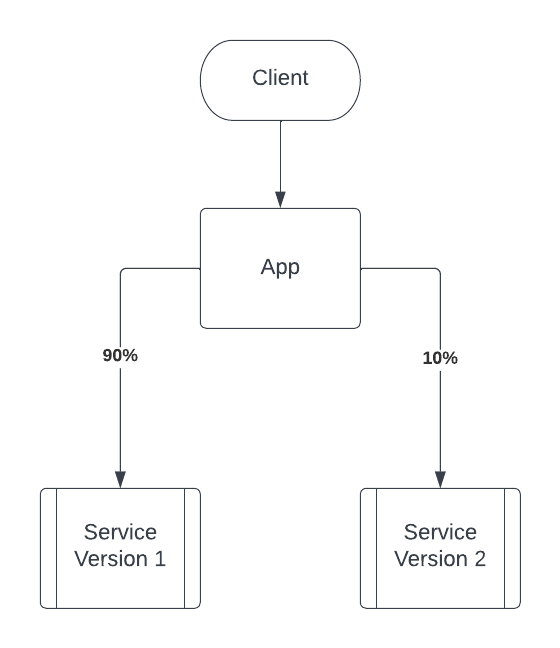

Traffic routing

Along the lines of resiliency, a service mesh can help with traffic routing and latency-aware load balancing. The traffic routing or traffic splitting feature also enables you to implement canary or blue/green deployment strategies. Using the traffic split configuration, you can specify how much traffic you want to direct to different versions of your microservice.
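A traffic split boils down to a weighted choice between backends. Here is a minimal sketch of a 90/10 canary split; the `pick_backend` helper and the `checkout-v1`/`checkout-v2` names are hypothetical, and an actual mesh would express the weights in its routing configuration rather than in code.

```python
import random


def pick_backend(weights, rng=random):
    """Pick a backend version in proportion to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical canary split: 90% of requests to v1, 10% to v2.
split = {"checkout-v1": 90, "checkout-v2": 10}

rng = random.Random(42)  # seeded only so repeated runs behave the same
sample = [pick_backend(split, rng) for _ in range(10_000)]
print(sample.count("checkout-v2") / len(sample))  # roughly 0.10
```

Shifting the weights gradually (say 90/10, then 50/50, then 0/100) gives you a canary rollout, while flipping straight from 100/0 to 0/100 gives you blue/green.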

Observability

Last but not least, we have observability. Out-of-the-box, a service mesh enables you to collect telemetry and metrics from all microservices participating in the service mesh. Most service meshes will enable you to track what are known as the four golden signals:

- Latency: time it takes to receive a reply

- Traffic: demand on a system such as requests per second

- Errors: request error rate

- Saturation: CPU/memory utilization in relation to their thresholds
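To show how these four signals relate to raw request data, here is a small sketch that computes them for one time window. The `Request` record, the choice of p95 for latency, treating 5xx as errors, and using CPU as the saturation measure are all assumptions made for the example; your mesh and monitoring stack will define these in their own way.

```python
from dataclasses import dataclass


@dataclass
class Request:
    duration_ms: float
    status: int


def golden_signals(requests, window_seconds, cpu_used, cpu_limit):
    """Summarize one time window of requests as the four golden signals."""
    durations = sorted(r.duration_ms for r in requests)
    errors = sum(1 for r in requests if r.status >= 500)
    if durations:
        # Nearest-rank 95th percentile, using integer maths for ceil(0.95 * n).
        rank = (95 * len(durations) + 99) // 100
        p95 = durations[rank - 1]
    else:
        p95 = 0.0
    return {
        "latency_p95_ms": p95,
        "traffic_rps": len(requests) / window_seconds,
        "error_rate": errors / len(requests) if requests else 0.0,
        "saturation": cpu_used / cpu_limit,
    }
```

Because the proxies see every request, the mesh can produce numbers like these for every service without each team instrumenting their own code.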

Another challenge with distributed systems is tracing a client request through the entire call chain of services. A service mesh can integrate with distributed tracing systems such as Jaeger or Zipkin to make this easier. Note that this often requires some modification to application code so that trace identifiers are added to, and propagated with, each request.
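The application change is usually small: copy the trace headers from the request you received onto any requests you make downstream. The sketch below shows the idea. The header list covers the W3C Trace Context and Zipkin B3 names, but exactly which headers your mesh and tracing backend expect is an assumption here, so check their documentation; `propagate_trace_headers` is a hypothetical helper.

```python
# Header names from W3C Trace Context and Zipkin B3, plus a common request ID.
TRACE_HEADERS = (
    "traceparent",
    "tracestate",
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
)


def propagate_trace_headers(incoming_headers, outgoing_headers=None):
    """Copy trace headers from an inbound request onto an outbound one."""
    # HTTP header names are case-insensitive, so normalize before matching.
    incoming = {k.lower(): v for k, v in incoming_headers.items()}
    outgoing = dict(outgoing_headers or {})
    for name in TRACE_HEADERS:
        if name in incoming:
            outgoing[name] = incoming[name]
    return outgoing


inbound = {"X-B3-TraceId": "463ac35c9f6413ad", "Accept": "application/json"}
print(propagate_trace_headers(inbound, {"content-type": "application/json"}))
```

Without this step, each hop looks like a brand new request to the tracing system and the call chain cannot be stitched back together.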

What are some new problems that service mesh can introduce?

A service mesh can make complex microservices architectures easier to manage, but the added layer of infrastructure adds new complexities. With more components added to the overall architecture, it can become harder for folks to understand the architecture and troubleshoot. There’s always a tradeoff…

Additionally, with the service proxy injected into each pod, more compute resources and network hops are required as traffic flows from the service container to the service proxy and mTLS handshakes are made between service proxies; this can also result in increased network latency.

So do you need a service mesh?

Going back to the original theme of this article… do you need a service mesh? Are the trade-offs worth it? Well, it depends… 😅

There are valid reasons why you may not need a service mesh. Perhaps your service topology is not deeply nested enough to warrant an additional layer of infrastructure. Perhaps you can get away with applying Kubernetes network policies to restrict traffic to pods. Perhaps an ingress controller with TLS off-loading is sufficient for your security requirements; there is enough overlap with ingress, particularly around resilience and observability, to warrant its use over a service mesh.

On the flip side, if you need complete control of all communication between microservices and need to provide end-to-end encryption of traffic between them, then yes, you will need a service mesh. If your microservices topology is deeply nested (with services relying on other services) and you need to improve the overall resiliency without modifying the app, then yes, you should consider a service mesh.

One other option that is worth mentioning is Dapr. Dapr provides a set of building blocks that developers can use to develop microservices. There is a bit of overlap between Dapr and service meshes, and the Dapr team has done a good job of comparing the two in its documentation. The biggest takeaway when comparing the two is that Dapr does not provide traffic routing/splitting. So if you need these capabilities, then yes, you will need a service mesh.

What to look for in a service mesh

Okay, so you need a service mesh… the next step is to figure out which one to implement. There are many available within the Cloud Native Landscape. You should investigate each and make a decision based on your needs.

Here are some things you should consider when selecting a service mesh to implement:

- Can the control plane be deployed in the cluster or should it be external to the cluster?

- For the data plane, is a per service proxy (sidecar) sufficient or do you need a per host proxy (sidecar-less)?

- Does your service mesh need to span across multiple clusters?

- Does your service mesh need to extend outside of the cluster and include VMs?

- Does the service mesh need to work with Gateway API?

- How much of the SMI spec does the service mesh support?

- How easy is it to onboard? Does the service mesh support incremental onboarding?

Looking ahead

Service mesh technology may be shifting towards Gateway API with the GAMMA Initiative. Others in the community are exploring alternative data plane implementations with sidecar-less architectures, eBPF, and even WebAssembly modules. There’s a lot happening in this space and it will be interesting to see how it all plays out.

Get hands-on

If you’d like to go a bit deeper into service mesh technology, be sure to check out the resources listed below then head over to the Open Service Mesh with Azure Kubernetes Service lab to get hands-on and see a service mesh in action! Open Service Mesh is an open-source, lightweight service mesh that is easy to install and operate, so I encourage you to take it for a spin 🚀

As always, let me know what you think in the comments below or via social channels including Twitter, Mastodon, or LinkedIn.

Til next time, cheers!

Resources

- Hands-on Lab: Open Service Mesh with Azure Kubernetes Service (Bicep)

- Hands-on Lab: Open Service Mesh with Azure Kubernetes Service (Terraform)

- Open Service Mesh

- Service mesh: A critical component of the cloud native stack

- What is Service Mesh and Why Do We Need It?

- What’s the big deal with service meshes? Think of them as SDN at Layer 7

- What is a service mesh?

- Service Mesh: Past, Present and Future